New algorithms locate where a video was shot from its images

Source: Xavier Sevillano

Eight randomly chosen keyframes of videos contained in the video/sound geotagged database used in this research (credit: Xavier Sevillano et al.)


Researchers at Ramón Llull University (Spain) have created a system that can geolocate some videos by comparing their images and audio with a worldwide multimedia database, for cases where textual metadata is unavailable or irrelevant.

In the future, this could help to find people who have gone missing after posting images on social networks, or even to recognize locations of terrorist executions by organizations such as ISIS.

The visual and audio data extracted from a video is merged and grouped into clusters so that algorithms developed by the researchers can compare it with data from a large collection of already-geolocated videos recorded around the world.
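The merge, cluster, and compare idea can be illustrated with a small Python sketch. It is not the researchers' implementation: the `fuse` and `estimate_location` helpers, the cosine similarity measure, the one-degree grid used for clustering, and the toy feature vectors are all assumptions chosen to keep the example short.

```python
import math
from collections import defaultdict


def fuse(visual, audio):
    """Merge visual and audio features by concatenation (an assumed fusion rule)."""
    return list(visual) + list(audio)


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def estimate_location(query, reference, k=5, cell_deg=1.0):
    """Guess (lat, lon) for `query` from geotagged `reference` videos.

    `reference` is a list of (feature_vector, (lat, lon)) pairs.
    """
    # 1. Retrieve the k reference videos most similar to the query.
    ranked = sorted(reference,
                    key=lambda item: cosine_similarity(query, item[0]),
                    reverse=True)
    neighbours = ranked[:k]

    # 2. Group the neighbours' coordinates into grid cells (a crude clustering).
    cells = defaultdict(list)
    for _, (lat, lon) in neighbours:
        cell = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        cells[cell].append((lat, lon))

    # 3. Take the densest cell and return the centroid of its members.
    densest = max(cells.values(), key=len)
    lat = sum(p[0] for p in densest) / len(densest)
    lon = sum(p[1] for p in densest) / len(densest)
    return lat, lon


# Toy reference set: invented features, real-looking but illustrative coordinates.
reference = [
    (fuse([1.0, 0.0], [0.1]), (41.38, 2.17)),
    (fuse([0.9, 0.1], [0.0]), (41.40, 2.15)),
    (fuse([0.8, 0.0], [0.2]), (41.39, 2.16)),
    (fuse([0.0, 1.0], [0.0]), (40.71, -74.00)),
    (fuse([0.1, 0.0], [1.0]), (35.68, 139.69)),
]
query = fuse([1.0, 0.05], [0.05])
print(estimate_location(query, reference, k=4))
```

With this toy data the three most similar reference videos fall in the same grid cell, so the estimate lands near latitude 41.39, longitude 2.16. A real system would need far richer features and a more careful clustering step than a fixed grid.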

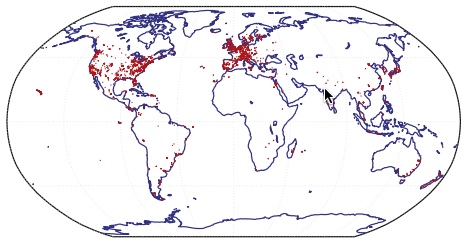

Geographical distribution of the 9660 videos of the geotagged database used in this research (each dot represents a video) (credit: Xavier Sevillano et al./Information Sciences)

In their study, published in the journal Information Sciences, the team used almost 10,000 video sequences from the MediaEval Placing task audiovisual database as a reference. The Placing task is a benchmark for algorithms that estimate the most probable geographical coordinates of multimedia content.

With this reference set, the system locates 3% of videos within ten kilometers of their actual location and 1% within one kilometer, which is four times more precise than previous results, according to the researchers.
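Figures such as "within ten kilometers" are typically computed from the great-circle distance between the predicted and true coordinates. The sketch below shows one way to do that with the haversine formula. The function names, the spherical-Earth radius of 6371 km, and the sample coordinates are assumptions for illustration, not details taken from the study.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; a spherical approximation


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def fraction_within(predictions, truths, radius_km):
    """Share of predictions that fall within radius_km of the true location."""
    hits = sum(haversine_km(p, t) <= radius_km
               for p, t in zip(predictions, truths))
    return hits / len(truths)


# Invented example: one exact hit, one miss of about 5.6 km, one far miss.
predictions = [(41.38, 2.17), (41.38, 2.17), (0.0, 0.0)]
truths = [(41.38, 2.17), (41.43, 2.17), (10.0, 10.0)]
for radius in (1, 10):
    print(radius, fraction_within(predictions, truths, radius))
```

In this invented sample one of three predictions is within 1 km and two of three are within 10 km; the study's own percentages come from the full test set, not from data like this.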

Another application for the system is to facilitate geographical browsing in video libraries such as YouTube. For example, a search for “Manhattan” (the New York borough) could be disambiguated from the 1970s band The Manhattans and the Woody Allen film “Manhattan,” the researchers suggest.

The audiovisual geotagging system architecture (credit: Xavier Sevillano et al.)

The researchers acknowledge that their method will require a much larger audiovisual database before it can be applied to the millions of videos on the Internet.

Abstract of Look, listen and find: A purely audiovisual approach to online videos geotagging

Tagging videos with the geo-coordinates of the place where they were filmed (i.e. geotagging) enables indexing online multimedia repositories using geographical criteria. However, millions of non geotagged videos available online are invisible to the eyes of geo-oriented applications, which calls for the development of automatic techniques for estimating the location where a video was filmed. The most successful approaches to this problem largely rely on exploiting the textual metadata associated to the video, but it is quite common to encounter videos with no title, description nor tags. This work focuses on this latter adverse scenario and proposes a purely audiovisual approach to geotagging based on audiovisual similarity retrieval, modality fusion and cluster density. Using a subset of the MediaEval 2011 Placing task data set, we evaluate the ability of several visual and acoustic features for estimating the videos location both separately and jointly (via fusion at feature and at cluster level). The optimally configured version of the proposed system is capable of geotagging videos within 1 km of their real location at least 4 times more precisely than any of the audiovisual and visual content-based participants in the MediaEval 2011 Placing task.
