Claimed Breakthrough Slays Classic Computing Problem; Encryp

Source: Tom Simonite

On Tuesday, University of Chicago professor László Babai presented a new algorithm that simplifies a long-standing, difficult problem in computer science.

A professor’s claim to have created an algorithm that dramatically simplifies one of theoretical computer science’s most notorious problems has experts preparing to reconsider a long-established truth of their field. It is also seen as a reminder that similar algorithmic breakthroughs are possible that could weaken the tough-to-crack problems at the heart of the cryptography protecting the world’s digital secrets.

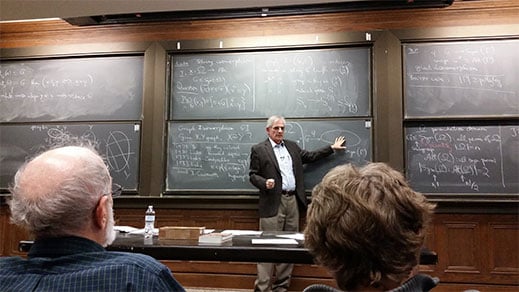

In a packed lecture theater on Tuesday and Thursday this week, University of Chicago professor László Babai gave the first two of a series of three lectures describing his new solution to a problem called graph isomorphism. The problem asks a computer to determine whether two different graphs―in the sense of a collection of “nodes” connected into a network, like a social graph―are in fact different representations of the same thing.
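To make the problem concrete, here is a minimal sketch of the naive approach: try every possible relabeling of the nodes and see whether any of them turns one graph into the other. This is not Babai's algorithm, only an illustration of the question being asked; the function name `are_isomorphic` and the edge-list representation are assumptions made for this example.

```python
from itertools import permutations

def are_isomorphic(n, edges_a, edges_b):
    """Brute-force check (illustrative only): try every relabeling of n nodes."""
    target = {frozenset(e) for e in edges_b}
    if len({frozenset(e) for e in edges_a}) != len(target):
        return False  # different edge counts can never match
    for perm in permutations(range(n)):
        # Relabel graph A's nodes with this permutation and compare edge sets.
        relabeled = {frozenset((perm[u], perm[v])) for u, v in edges_a}
        if relabeled == target:
            return True
    return False

square = [(0, 1), (1, 2), (2, 3), (3, 0)]
same_square_relabeled = [(0, 2), (2, 1), (1, 3), (3, 0)]
triangle_with_tail = [(0, 1), (1, 2), (2, 0), (2, 3)]

print(are_isomorphic(4, square, same_square_relabeled))  # True
print(are_isomorphic(4, square, triangle_with_tail))     # False
```

The loop runs over all n! relabelings, so it becomes unusable even for modestly sized graphs, which is why a faster general method matters.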

Babai’s lectures have caused excitement because graph isomorphism is known as a very challenging problem believed for more than 30 years to be not too far from the very hardest class of problems for computers to solve. If Babai is right, it is in fact much closer to falling into the class of problems that can be solved efficiently by computers, a category known as P (see “What Does ‘P vs. NP’ Mean for the Rest of Us?”).

“This has caught everyone’s imagination because the improvement is so large,” says Richard Lipton, a professor who works on theoretical computer science at Georgia Institute of Technology. “He got it down to a much lower class.” After MIT associate professor Scott Aaronson heard about Babai’s claim, he blogged that it could be “the theoretical computer science result of the decade.”

Computer scientists measure the difficulty of a problem by looking at how quickly the computational resources an algorithm needs to solve it grow as the size of the problem increases. Graph isomorphism is considered extremely difficult because the best known algorithm needs roughly exponentially more resources as the size of the graphs it is working on increases.
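A rough way to see why the growth rate matters is to compare a polynomial step count with an exponential one as the input gets bigger. The functions below, n^3 and 2^n, are stand-ins chosen for illustration and are not the actual running-time bounds of either of Babai's algorithms; `growth_table` is a hypothetical helper.

```python
def growth_table(sizes):
    """Return (n, polynomial_steps, exponential_steps) for each input size n."""
    # n ** 3 and 2 ** n are illustrative growth rates, not real algorithm bounds.
    return [(n, n ** 3, 2 ** n) for n in sizes]

for n, poly, expo in growth_table([10, 20, 40, 80]):
    print(f"n={n:>3}  n^3={poly:>8,}  2^n={expo:,}")
```

At n = 10 the two counts are nearly equal (1,000 versus 1,024), but each doubling of n multiplies the polynomial count by only eight while squaring the exponential one.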

That algorithm was published in 1983 by Babai with Eugene Luks of the University of Oregon. Babai claims that his new algorithm experiences a much less punishingly steep increase in resources as the graphs it is working on get larger, giving graph isomorphism a major difficulty downgrade.

Babai declined an interview request, saying that publicity would be inappropriate before he has fully described his result in a paper and it has been verified by his peers. He told one excited attendee of his first talk that a preprint would be coming “soon, soon.”

Lipton says that caution is advised anytime a breakthrough is claimed without a full paper being available, but that Babai’s reputation means many people in this area of research think his result is likely real. “We’re talking about a stellar researcher,” says Lipton.

If Babai’s result is confirmed, there won’t be many ripples outside the rarefied world of experts on computational complexity. Graph isomorphism can crop up in some practical situations, for example in searching databases of molecular structures, which can be represented as graphs. But there are workarounds to having to solve the problem in all possible forms, as Babai’s algorithm does.

A comparable breakthrough on factoring, a problem ranked at a similar level of computational complexity to graph isomorphism, would have more significant repercussions. Factoring (decomposing a number into its prime factors) underpins the most widely used encryption for securing stored data and information moving over the Internet.
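For readers unfamiliar with factoring, the sketch below uses trial division, the simplest possible method. It is far slower than the best known factoring algorithms and is shown only to illustrate the task; the function name `trial_division` is an assumption of this example.

```python
def trial_division(n):
    """Return the prime factors of n (n >= 2) in ascending order."""
    factors = []
    d = 2
    while d * d <= n:
        # Divide out each factor d as many times as it appears.
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)  # whatever remains is itself prime
    return factors

print(trial_division(84))    # [2, 2, 3, 7]
print(trial_division(3233))  # [53, 61]
```

The number of candidate divisors grows with the square root of n, which is exponential in the number of digits, so this approach is hopeless against the very large numbers used in cryptographic keys.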

The best known algorithm for factoring is currently placed in a similar difficulty class to the one graph isomorphism has resided in. Factoring lacks some mathematical features that may have enabled Babai’s result. But Lipton says his claim is a reminder that new algorithms can overturn long-held orthodoxies about these kinds of problems.

“If somebody figures out how to make that go really fast, even theoretically, that would be very upsetting to cryptographers,” he says. Existing encryption can be broken using significant computing power if the numbers used to seed the cryptographic algorithms are not large enough. An algorithmic breakthrough for factoring might change what is practical for government agencies with access to major computing power.

Even if a better algorithm for factoring weren’t practical, it would be cause for some major soul searching, says Lipton. “It would be harder to stand up in front of a room and say ‘My cryptography is secure because it uses factoring.’”
