Artificial Intelligence: 10 Things To Know

Source: Thomas Claburn

Andrew Moore, Dean of Carnegie Mellon's School of Computer Science, talks about artificial intelligence, robotics, and the future of education.

Artificial intelligence (AI) is already widely used in software and online services and it is becoming more common, thanks to ongoing progress in machine learning algorithms and a variety of related technologies. But popular depictions of the technology as software-based sentience -- artificial consciousness -- obscure what AI is and how it's actually deployed.

Earlier this month, Andrew Moore, dean of Carnegie Mellon's School of Computer Science, spoke with InformationWeek about AI and the growing role it is playing in people's lives. Moore sees a bright future for artificial intelligence, along with a number of challenges. Here are 10 insights from our conversation.

AI is a fancy calculator

Artificial intelligence should not be confused with human intelligence. Moore said that when he explains AI to students, he points out that the word "artificial" is in there for a reason. "We are trying to make a system which at first sight looks like it might be behaving in some manner that we might ascribe to intelligence," said Moore. "Everything, however, with 'artificial' in the label is actually just a really, really, really fancy calculator, all the way from chess programs to software in cars, to credit-scoring systems, to systems that are monitoring pharmaceutical sales for signs of an outbreak."

There are two broad areas of AI research

The first, said Moore, is autonomy. "This is about the science of making systems that can survive without humans in the loop, and can be useful even without getting instructions from the people who created them," said Moore. The second has to do with augmenting human capabilities, through services like Apple's Siri. "The idea is that we all currently have, and are going to have much, much more of, the notion of a concierge-type system that's whispering in our ears to help us make better decisions in our lives," said Moore.

Presently, we're too worried about Skynet

Skynet, the malevolent artificial intelligence that threatens humanity in the Terminator movies, isn't a realistic fear at the moment, despite the concerns voiced by a few tech luminaries. Moore said he believes we overestimate our potential to create artificial entities capable of self-directed action. "The idea of building a robot or a software system which, like a human, has got a real notion of its goals being to just generally survive and maybe reproduce, no one has any idea how to do that," he said. "It's real science fiction. It's like asking researchers to start designing a time machine."

Extrapolating current optimization algorithms and statistical reasoning into the future does not lead to artificial, self-directed agents, he said. Moore acknowledged the possibility that those conducting research on simulating living brains could achieve some breakthrough. But he estimated that 98% of AI researchers are focused on engineering systems that can help people make better decisions.

AI will save lives

In the US alone, said Moore, there are more than a billion search engine queries every day, and perhaps 5% of them come from people who are puzzled, uncertain, or worried about their health. "They're asking for advice about some drug or advice about some symptom or these kinds of things," said Moore. "And people are making bad decisions, which are costing huge numbers of lives every year, by not going to physicians under some circumstances or not letting a doctor know about something important or mismanaging their medications.

"And the kind of simple artificial intelligence which just processes information and makes sure that you're getting relevant information about your current situation is going to save a lot of lives. Just the fact that the whole population will start to act like it's surrounded by very smart advisors in healthcare, law, and education, that could be a wonderful thing for how our lives will be in the future."

Half the cars on the road will be self-driving by 2029

"Although we could get there much sooner, there will be huge regulatory issues and technology issues before it happens," said Moore, adding that it makes sense to be skeptical about such predictions because many technical obstacles have yet to be overcome. "Driving on a busy city street, where there are pedestrians and double-parked vehicles, is an unsolved problem, no matter what anyone might tell you."

AI has yet to be reconciled with liability

"Some of our professors are now in active conversations with senior leaders at insurance companies, just because the whole question of what insurance means in the future is going to be very different," said Moore.

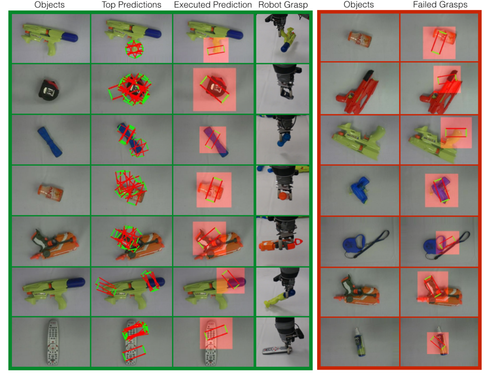

Robot grasping test results (Image: Carnegie Mellon University)

AI still can't deal with objects very well

"In robotics, we have done a fantastic job engineering eyes and ears, and even noses for robots, and we've done a fantastic job of making them mobile," said Moore. "We still suck at manipulation." At CMU and other institutions conducting robotics research, there's considerable effort directed at bridging this gap.

As an example, Moore pointed to the work of CMU assistant professor Abhinav Gupta, who has been trying to train a robot called Baxter to manipulate objects by handling them over and over and over. "It's really cool, and it's kind of ghostly to see this robot that's doomed forever to be picking things up, shaking them, moving them, to build up more data about what physical things are like to interact with," said Moore.

Privacy is a big deal

At CMU, there are seven or eight faculty members pursuing privacy-related research, Moore estimated. Fifteen years ago, he said, that number would probably be zero, or close to it. "That's not only because it's right [to be concerned about privacy], but because the large companies that will eventually be deploying these kinds of things -- companies like Google, Microsoft, and Amazon -- they would never agree to any technology on a large scale if it damaged privacy," said Moore.

AI can improve privacy

People have a hard time understanding privacy policies, but software can provide clarity and verification, particularly through automated auditing and compliance systems. Moore pointed to the work of CMU associate professor Jason Hong, who created Privacygrade.org, a website that contains data on how over 1 million Android apps handle privacy, based on an analysis of the APIs used within the apps.

He also highlighted the work of associate professor Anupam Datta, who has used code to prove that Microsoft's Bing search engine does not leak data. "If you look around all the universities at what areas they're hiring in, you're going to see that privacy protection, especially something called disclosure limitation, is the number one area," said Moore.

AI will change teaching

Moore said he believes that online education will lead to a few hundred superstar professors who excel at imparting information to students in a way that's entertaining and effective. "The ones who do the best job will be the ones who get used by millions of students," he said. "But there's still a need for personal interaction, for actually working with the students ... So I think these professors [who aren't superstar lecturers] will not actually be sleeping under bridges."
