'Galileo,' MIT's new A.I. system, could help robots he

Source: Katherine Noyes

Computers can be taught to understand many things about the world, but when it comes to predicting what will happen when two objects collide, there's just nothing like real-world experience.

That's where Galileo comes in. Developed by MIT's Computer Science and Artificial Intelligence Lab (CSAIL), the new computational model has proven to be just as accurate as humans are at predicting how real-world objects move and interact.

Ultimately, it could help robots predict events in disaster situations and help humans avoid harm.

From their earliest days, humans learn how physical objects interact, often through bumps, bruises and painful experience. Computers, however, don't have the benefit of that early training.

To make up for that lack, CSAIL researchers created Galileo, a system that can train itself using a combination of real-world videos and a 3D physics engine to infer the physical properties of objects and predict the outcome of a variety of physical events.

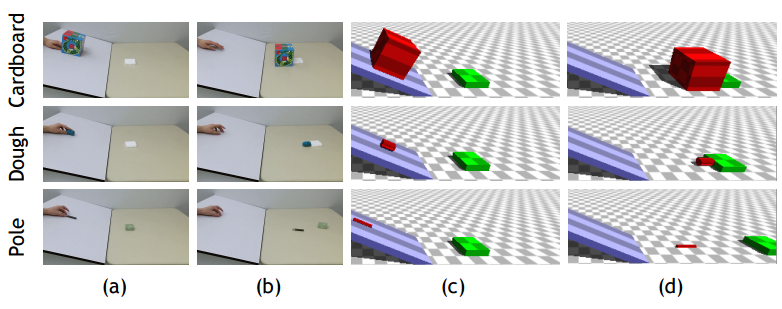

To train Galileo, the researchers used a set of 150 videos depicting physical events involving objects made from 15 different materials, including cardboard, foam, metal and rubber. Equipped with that training, the model could generate a data set of objects and their various physical properties.

They then fed the model information from Bullet, a 3D physics engine often used to create special effects for movies and video games. By taking the key elements of a given scene and then physically simulating it forward in time, Bullet served as a "reality check" against Galileo’s hypotheses, MIT said.
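Using Bullet as a "reality check" is a form of analysis by synthesis. The system guesses an object's physical properties, simulates the scene forward, and favors guesses whose simulated motion matches what the video shows. The toy sketch below illustrates that loop and is not Galileo's code. `simulate_slide` is a hypothetical closed-form stand-in for a physics engine such as Bullet, the "observed" positions are synthetic, and the sampler is a plain Metropolis-Hastings chain over a single friction coefficient.

```python
import math
import random

G = 9.81  # gravitational acceleration in m/s^2

def simulate_slide(mu, angle_deg, times):
    """Hypothetical stand-in for a physics engine such as Bullet.

    Returns the distance (m) travelled along a ramp of `angle_deg` degrees at
    each time in `times` by a block released from rest with friction `mu`.
    Simplification: one coefficient serves as both static and kinetic friction.
    """
    theta = math.radians(angle_deg)
    accel = G * (math.sin(theta) - mu * math.cos(theta))
    accel = max(accel, 0.0)  # friction holds the block in place if gravity can't overcome it
    return [0.5 * accel * t * t for t in times]

def log_likelihood(mu, angle_deg, times, observed, noise=0.02):
    """Gaussian log-likelihood (up to a constant) of observed vs. simulated positions."""
    simulated = simulate_slide(mu, angle_deg, times)
    return -sum((s - o) ** 2 for s, o in zip(simulated, observed)) / (2 * noise ** 2)

def infer_friction(angle_deg, times, observed, steps=5000, seed=0):
    """Metropolis-Hastings over mu with a uniform prior on [0, 1]; returns the posterior mean."""
    rng = random.Random(seed)
    mu = rng.uniform(0.0, 1.0)
    ll = log_likelihood(mu, angle_deg, times, observed)
    samples = []
    for _ in range(steps):
        proposal = mu + rng.gauss(0.0, 0.05)  # symmetric random-walk proposal
        if 0.0 <= proposal <= 1.0:  # proposals outside the prior's support are rejected
            ll_new = log_likelihood(proposal, angle_deg, times, observed)
            if rng.random() < math.exp(min(0.0, ll_new - ll)):
                mu, ll = proposal, ll_new
        samples.append(mu)
    kept = samples[steps // 2:]  # discard the first half as burn-in
    return sum(kept) / len(kept)

# Usage: synthesize an "observed" clip with mu = 0.2 on a 20-degree ramp, then recover mu.
times = [0.2, 0.4, 0.6, 0.8, 1.0]
observed = simulate_slide(0.2, 20.0, times)
print(round(infer_friction(20.0, times, observed), 2))
```

A real system would compare tracked object trajectories from video against a full 3D simulation and infer several properties at once, such as mass and friction. The single-parameter version only shows the shape of the propose, simulate, and compare cycle.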

Finally, the team developed deep-learning algorithms that allow the model to teach itself to further improve its predictions.

To test Galileo’s predictive powers, the team had it compete against human subjects in predicting the outcomes of a series of simulations, one of which can be seen in an online demo.

In one simulation, for example, users first see a collision involving a 20-degree inclined ramp; they're then shown the first frame of a video with a 10-degree ramp and asked to predict whether the object will slide down the surface.
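The ramp task can be framed as probabilistic simulation in a few lines. The sketch below is hypothetical and far simpler than Galileo. It assumes the object in the 20-degree clip did slide, and it treats friction as a single static coefficient `mu` with a uniform prior on [0, 1]. It also uses the textbook rule that a block at rest on an incline starts to slide when tan θ exceeds `mu`. The code samples friction values, discards those that contradict the first clip, and counts how many of the survivors would slide on the 10-degree ramp.

```python
import math
import random

def slides(mu, angle_deg):
    """Textbook rule: a block at rest starts to slide once tan(angle) exceeds static friction mu."""
    return math.tan(math.radians(angle_deg)) > mu

def predict_slide_probability(seen_angle, seen_slid, test_angle, n=100_000, seed=0):
    """Estimate P(slides on test_angle | what happened on seen_angle) by simulation.

    Assumption: mu has a uniform prior on [0, 1]. Hypotheses that contradict the
    first clip are rejected; the survivors vote on the second ramp.
    """
    rng = random.Random(seed)
    consistent = 0
    slid_on_test = 0
    for _ in range(n):
        mu = rng.uniform(0.0, 1.0)
        if slides(mu, seen_angle) != seen_slid:
            continue  # this friction value contradicts the first clip
        consistent += 1
        if slides(mu, test_angle):
            slid_on_test += 1
    return slid_on_test / consistent

# Assumed scenario: the object slid on the 20-degree ramp; will it slide at 10 degrees?
p = predict_slide_probability(20.0, True, 10.0)
print(f"P(slide on 10-degree ramp) = {p:.2f}")
```

Under these assumptions, the estimate lands near tan 10° / tan 20° ≈ 0.48. A different prior or a richer simulator would change that number.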

“Interestingly, both the computer model and human subjects perform this task at chance and have a bias at saying that the object will move,” said Ilker Yildirim, who was lead author alongside CSAIL PhD student Jiajun Wu on a paper describing the research. “This suggests not only that humans and computers make similar errors, but provides further evidence that human scene understanding can be best described as probabilistic simulation.”

The paper was presented last month at the Conference on Neural Information Processing Systems in Montreal, MIT announced on Monday.

Eventually, the researchers hope to extend the work to more complex scenarios, with an eye toward applications in robotics and artificial intelligence.

“Imagine a robot that can readily adapt to an extreme physical event like a tornado or an earthquake,” said coauthor Joseph Lim. “Ultimately, our goal is to create flexible models that can assist humans in settings like that, where there is significant uncertainty.”
