Searching Images Reimagined

Source: R. Colin Johnson

Flora identifies the flower in Chinese and English using the core of National Taiwan University's mobile visual search engine, which can search by face, photo or any other visual criteria.

(Source: NTU, used with permission)

Image search engines are used for all sorts of purposes, usually to identify people, but they can also sense almost any visual criteria, as with flora-identification apps that identify flowers in photos. Pooling funding from Intel, MediaTek, Microsoft Research, Quanta Research, HTC and others, Professor Winston Hsu at National Taiwan University (NTU) has been expanding image search engines for the past four years into multimedia data analytics, large-scale image/video retrieval, and scalable and mobile visual recognition chips for various projects.

Hsu has begun applying his image recognition engine using large-scale analytics in an attempt to keep pace with the ultra-high resolution of today's digital cameras. To demonstrate the usefulness of the image searching algorithms developed at NTU, Hsu and his students built a flower identification app called Flora for the iTunes App Store. That was in 2014, however, so don't go looking for it: Hsu had to take it down and is currently looking for a partner company to keep it updated with each new OS release.

The photos below demonstrate the Flora app.

Other novel applications of multimedia data analytics include mining the demographics (gender, age, race) of social media users visiting different locations, mining travel paths for personalized travel recommendations, and even detecting the slightest differences in the color of facial skin to sense heart rate and deduce the "worry level" of suspicious characters for applications like homeland security.

"To find the 1,000 words that every picture is worth, even the semantic concepts being conceived must be understood," Hsu told EE Times in an exclusive interview.

His facial image search engine can now almost instantly search through a half-million photos by indexing them in advance: it identifies where in each image the faces are located and tags each face with gender, age, race and other criteria determined ahead of time.
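The idea of indexing in advance can be illustrated with a minimal sketch. This is not Hsu's engine: it assumes that some separate face detector and attribute classifier have already produced a bounding box and a set of labels for every face, and the class name `FaceIndex`, its methods and the attribute names are all hypothetical. It only shows why a query is fast once that work is done, because a search becomes a set intersection rather than a pass over the photos.

```python
from collections import defaultdict


class FaceIndex:
    """Toy inverted index over face attributes computed ahead of time."""

    def __init__(self):
        # (attribute, value) -> set of (photo_id, face_no) keys
        self._postings = defaultdict(set)
        # (photo_id, face_no) -> bounding box of that face
        self._boxes = {}

    def add(self, photo_id, faces):
        """Index one photo. `faces` is a list of (box, attributes) pairs.

        Assumes each photo_id is added only once.
        """
        for face_no, (box, attributes) in enumerate(faces):
            key = (photo_id, face_no)
            self._boxes[key] = box
            for item in attributes.items():
                self._postings[item].add(key)

    def search(self, **criteria):
        """Return sorted (photo_id, box) pairs for faces meeting every criterion."""
        if not criteria:
            return []
        postings = [self._postings.get(item, set()) for item in criteria.items()]
        # A face matches only if it appears under every requested attribute.
        matches = set.intersection(*postings)
        return sorted(
            (photo_id, self._boxes[(photo_id, face_no)])
            for photo_id, face_no in matches
        )
```

Because the index is keyed per face rather than per photo, a query such as `search(gender="female", age="adult")` returns only photos in which a single face satisfies both criteria.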

He also invented a search engine in his lab, with the cooperation of his many doctoral candidates, to retrieve photos of people from sketches of them. From there he began indexing three-dimensional (3-D) models and building 3-D databases. Now he can search through patents and other novel data using semantic search methods such as "search by impression."

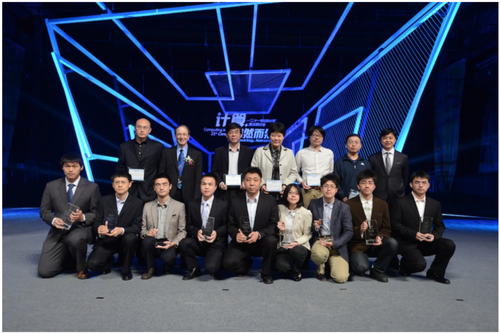

Search engines built by Hsu and his students have taken first place in Microsoft Research's annual Bing Image Retrieval Challenge by coming up with the correct image in under 12 seconds (see photo), using neural networks trained on supplied image databases and then tested on previously unseen ones. His group also won an award in "New York 360 Degree," a Multimedia Grand Challenge Multimodal contest hosted by IBM's T.J. Watson Research Center and sponsored by the Association for Computing Machinery (ACM).

Doctoral candidate Yin-Hsi Kuo (fourth from right, first row) was the only female among the 10 winners, and the only one from Taiwan to be awarded a Microsoft Research Asia Fellowship.

(Source: NTU, used with permission)

Hsu's latest research, in cooperation with the University of Rochester (New York), identifies styles, such as clothing styles, that link to all sorts of applications, from e-commerce for "dressing like stars" to surveillance systems that identify suspicious clothing styles for security. For instance, according to Hsu, authorities began using similar technology after the terrorist "pressure cooker" bombing during the annual Boston Marathon in 2013.

"We need to understand the semantics [meaning] of images for commerce as well as for security," Hsu told us.

With a semantic understanding of images, commerce questions like "do fashion shows really influence street-chic" could be answered quantitatively by detecting real correlations.

"I received mail from stars about that one," Hsu told EE Times.

Today, with funding from Intel and MediaTek, Hsu is using deep-learning neural networks of 20 layers or more to discover hidden correlations that conventional methods cannot identify. The search engine uses up to 57 million parameters and requires graphics processing units (GPUs) to do the processing. However, his ultimate goal is to streamline the algorithms for execution on any smartphone, inside a vehicle, or even in surveillance cameras by using compression and removing redundancy. In one prototype, Hsu reduced the number of parameters from 57 million down to under four million for compact fine-tuned image sets.
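The article does not say which compression technique Hsu's group uses. As one illustration of what "removing redundancy" can mean, the sketch below shows magnitude pruning, a common approach that is swapped in here purely as an example: weights with the smallest absolute values are set to zero on the assumption that they contribute least to the output. The function name `prune_by_magnitude` and its `keep_fraction` parameter are hypothetical.

```python
def prune_by_magnitude(weights, keep_fraction):
    """Zero all but the largest-magnitude weights.

    Returns (pruned_weights, kept_count). Illustrative only; this is not
    the method described in the article.
    """
    if not 0.0 <= keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be between 0 and 1")
    keep = int(len(weights) * keep_fraction)
    # Indices ordered from largest to smallest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    kept = set(order[:keep])
    pruned = [w if i in kept else 0.0 for i, w in enumerate(weights)]
    return pruned, keep
```

In practice a pruned network is usually fine-tuned afterward to recover accuracy, and the zeroed weights only save memory and compute if they are stored and executed in a sparse format.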

MediaTek is currently designing a chip to perform the heavy lifting in a small, low-power form factor that could be ready for handsets in three to five years.
