Deep Learning On Babbage's Analytical Engine

Source: Mike James

Speculating on what might have happened if Babbage had built his Analytical Engine is fun, but did you ever think that a Victorian computer could implement a neural network and learn to read handwritten digits? Well, it can, and this is not a joke.

Charles Babbage's Analytical Engine was the first computer, or rather it would have been had it been completed in the 19th century. A completely mechanical, card-programmed computer might not seem impressive compared to a silicon-based electronic machine, but a computer is a computer and, given enough time and memory, any of them can in principle perform any computation.

I Programmer has previously pondered how computer programming and artificial intelligence might have developed had Babbage persevered with his design to the point where it was realized in cogs and gears (see What if Babbage..?). Now the theme has been extended to deep learning.

So how can you implement a deep neural network on a machine that doesn't exist?

The answer is that there is an Analytical Engine simulator that runs the instruction set of the original machine. However, a neural network is a big computation, and the engine has only about 20 kB of memory (its store was designed to hold 1,000 numbers of 50 decimal digits) and a small instruction set. That didn't stop Adam P. Goucher, as you can see in the following video:

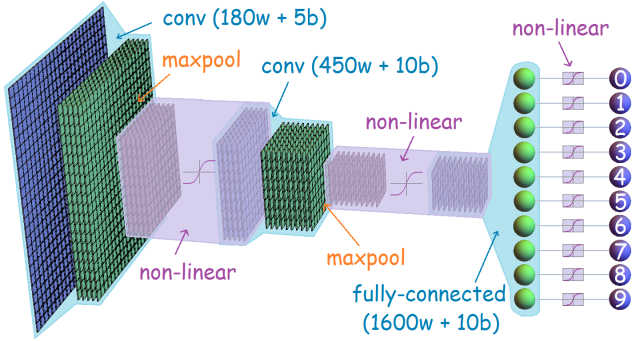

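To make this feasible, Goucher used a convolutional neural network. Because a convolutional layer reuses the same small set of weights at every position in the image, it has far fewer weights than a fully connected network and is, in that sense, actually simpler.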
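To see why weight sharing helps, here is a minimal sketch in plain Python that slides one shared kernel over an image and compares weight counts for a convolutional and a fully connected layer. The sizes (a 28×28 image, 5×5 kernels, 8 feature maps) are hypothetical illustrations, not the architecture Goucher actually used, and bias terms are ignored.

```python
# Illustrative sizes only: a 28x28 greyscale image, 5x5 kernels and
# 8 feature maps are hypothetical choices, not Goucher's architecture.
# Bias terms are ignored in the weight counts.

def conv2d_valid(image, kernel):
    """Slide one shared kernel over a 2D image (stride 1, no padding).

    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped)."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def conv_weight_count(kh, kw, in_channels, out_channels):
    """Weights in a convolutional layer: one kh x kw kernel per channel pair."""
    return kh * kw * in_channels * out_channels


def dense_weight_count(n_in, n_out):
    """Weights in a fully connected layer: one per input-output pair."""
    return n_in * n_out


if __name__ == "__main__":
    side, k, maps = 28, 5, 8
    out_side = side - k + 1  # 24 with 'valid' convolution
    print("conv weights: ", conv_weight_count(k, k, 1, maps))
    print("dense weights:", dense_weight_count(side * side, out_side * out_side * maps))
```

With these hypothetical sizes the convolutional layer needs 200 weights, whereas a fully connected layer producing the same 24 × 24 × 8 outputs would need 3,612,672.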

Goucher used Bash and Python scripts to generate the Analytical Engine code, with each line of code corresponding to a punched card of the sort used in a Jacquard loom. As he points out in his blog post, the 412,663 lines of code needed would make a stack of cards as tall as the Burj Khalifa in Dubai. This would not be an easy program to run on a real Analytical Engine.
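As a rough illustration of why generated card decks get so long, here is a minimal sketch of a generator that unrolls a single dot product (one output of a convolution) into straight-line cards. The card syntax is an assumption, loosely modelled on John Walker's Analytical Engine emulator; the article does not say exactly which simulator or card format Goucher's scripts targeted. The column numbers and the function name `dot_product_cards` are hypothetical, and the fixed-point rescaling a real multiplication would need is ignored.

```python
# Hypothetical card syntax, loosely modelled on John Walker's Analytical
# Engine emulator: "N" sets a store column, "*" / "+" select the mill
# operation, "L" loads a column into the mill and "S" stores the result.
# Fixed-point scaling after multiplication is deliberately ignored.

def dot_product_cards(weight_cols, input_cols, acc_col, tmp_col):
    """Unroll sum_i w[i] * x[i] into straight-line cards (no loops)."""
    cards = [f"N{acc_col:03d} 0"]  # acc = 0
    for w, x in zip(weight_cols, input_cols):
        # tmp = w * x
        cards += ["*", f"L{w:03d}", f"L{x:03d}", f"S{tmp_col:03d}"]
        # acc = acc + tmp
        cards += ["+", f"L{acc_col:03d}", f"L{tmp_col:03d}", f"S{acc_col:03d}"]
    return cards


if __name__ == "__main__":
    # A 5x5 kernel at one image position: weights in columns 1-25,
    # pixels in columns 26-50, accumulator in 100, scratch in 101.
    deck = dot_product_cards(range(1, 26), range(26, 51), acc_col=100, tmp_col=101)
    print(len(deck))  # 1 + 8 * 25 = 201 cards
    print("\n".join(deck[:9]))
```

Under these assumptions a single 5×5 kernel position costs 201 cards, so repeating the pattern for every output position, feature map and layer shows how a fully unrolled deck quickly grows. For scale, spreading the Burj Khalifa's 828 m over 412,663 cards works out at roughly 2 mm per card.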

So did it work?

After training on 20,000 handwritten digit images, the network achieved 96.31% accuracy on a test set of 10,000 new images.

So Babbage could have made AI possible in Victorian times?

Not really.

Processing the 412,663 cards on a real engine might have taken several centuries.

Of course, the code could have been optimized and then possibly...

Perhaps not.

It is a wonderful achievement to demonstrate that the most 21st century of algorithms would have been at home on a 19th century computer.

Perhaps the simulator needs to be better known. Analytical Engine Hackathon anyone?
