Moral machines

Source: Doug Johnson

In 2005 I wrote:

George Dyson, in his article "Turing's Cathedral" (November 24, 2005), quotes a Google employee as saying this about the Google Book Search (formerly Google Print) project:

"We are not scanning all those books to be read by people... We are scanning them to be read by an AI."

I have to admit, this gave me the willies. Should it? Is there an AI equivalent to Asimov's Three Laws of Robotics?

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

I'd sleep better knowing that someone a whole lot smarter than I am is thinking this through. Will AIs be the benign helpmates envisioned by Ray Kurzweil in The Age of Spiritual Machines, or the nemesis of humanity described in Dan Simmons's Hyperion novels or Clarke's 2001?

Is my friendly little PowerBook about to say to me: "I'm sorry, Doug. I'm afraid I can't do that."?

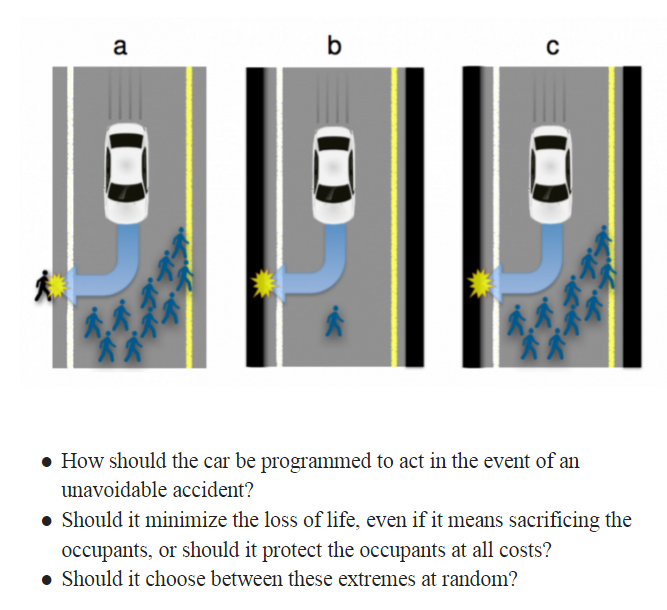

I thought of this post when our district instructional technology coordinator used the problem pictured above, which she saw at this summer's ISTE conference, as the grounding activity for a tech meeting (source noted above).

No one on our tech department staff felt they had a good answer to this dilemma.

Were a human behind the wheel in a situation where lives were at stake, I suspect most of us would act instinctively rather than rationally, with the primary instinct being survival. But with AI, we can now have truly altruistic decisions made for us. Would the car's moral sensor deduce that the loss of my single life was preferable to the loss of multiple people? Or of younger people? Or of smarter people? An ethical case could certainly be made...

There was a lot of talk about coding for all students at the ISTE conference this year. But my takeaways were more about the ethical side of technology: How do we ensure equity? Are our search algorithms racially biased? Does technology discriminate against the poor and powerless?

As what Kurzweil calls "the singularity" (the point at which machine intelligence becomes powerful enough to teach itself) gets ever closer, coding seems rather weak tea. Should we be paying less attention to if-thens and more attention to rights and wrongs in our computing activities?
