AI and the Ghost in the Machine

Source: Cameron Coward

The concept of artificial intelligence long predates the advent of modern computers, going back at least as far as Greek mythology. Hephaestus, the Greek god of craftsmen and blacksmiths, was believed to have created automatons to work for him. Another mythological figure, Pygmalion, carved a statue of a beautiful woman from ivory and then fell in love with it. Aphrodite imbued the statue with life as a gift to Pygmalion, who then married the now-living woman.

Throughout history, myths and legends of artificial beings endowed with intelligence were common. Their explanations ranged from simple supernatural origins (as in the Greek myths) to more scientifically reasoned methods as alchemy grew in popularity. In fiction, particularly science fiction, artificial intelligence became more and more common beginning in the 19th century.

But it wasn’t until mathematics, philosophy, and the scientific method had advanced enough in the 19th and 20th centuries that artificial intelligence was taken seriously as an actual possibility. It was during this time that mathematicians such as George Boole, Bertrand Russell, and Alfred North Whitehead began presenting theories formalizing logical reasoning. With the development of digital computers in the second half of the 20th century, these concepts were put into practice, and AI research began in earnest.

Over the last 50 years, interest in AI development has waxed and waned with public interest and the successes and failures of the industry. Reality has often fallen short of the predictions made by researchers in the field and by science fiction visionaries. Generally, this can be chalked up to computing limitations. But a deeper problem, that of understanding what intelligence actually is, has been a source of tremendous debate.

Despite these setbacks, AI research and development has continued. Currently, this research is being conducted by technology corporations who see the economic potential in such advancements, and by academics working at universities around the world. Where does that research currently stand, and what might we expect to see in the future? To answer that, we’ll first need to attempt to define what exactly constitutes artificial intelligence.

Weak AI, AGI, and Strong AI

You may be surprised to learn that it is generally accepted that artificial intelligence already exists. As Albert (yes, that’s a pseudonym), a Silicon Valley AI researcher, puts it: “…AI is monitoring your credit card transactions for weird behavior, AI is reading the numbers you write on your bank checks. If you search for ‘sunset’ in the pictures on your phone, it’s AI vision that finds them.” This sort of artificial intelligence is what the industry calls “weak AI”.

Weak AI

Weak AI is dedicated to a narrow task; Apple’s Siri is one example. While Siri is considered to be AI, it is only capable of operating in a pre-defined range that combines a handful of narrow AI tasks. Siri can perform language processing, interpretation of user requests, and other basic tasks. But Siri doesn’t have any sentience or consciousness, and for that reason many people find it unsatisfying to even define such a system as AI.

Albert, however, believes that AI is something of a moving target, saying “There is a long running joke in the AI research community that once we solve something then people decide that it’s not real intelligence!” Just a few decades ago, the capabilities of an AI assistant like Siri would have been considered AI. Albert continues, “People used to think that chess was the pinnacle of intelligence, until we beat the world champion. Then they said that we could never beat Go since that search space was too large and required ‘intuition’. Until we beat the world champion last year…”

Strong AI

Still, Albert, along with other AI researchers, only defines these sorts of systems as weak AI. Strong AI, on the other hand, is what most laymen think of when someone brings up artificial intelligence. A Strong AI would be capable of actual thought and reasoning, and would possess sentience and/or consciousness. This is the sort of AI that defined science fiction entities like HAL 9000, KITT, and Cortana (in Halo, not Microsoft’s personal assistant).

Artificial General Intelligence

What actually constitutes a strong AI, and how to test and define such an entity, is a controversial subject full of heated debate. By all accounts, we’re not very close to having strong AI. But another type of system, AGI (Artificial General Intelligence), is a sort of bridge between weak AI and strong AI. While AGI wouldn’t possess the sentience of a strong AI, it would be far more capable than weak AI. A true AGI could learn from information presented to it, and could answer any question based on that information (and could perform tasks related to it).

While AGI is where most current research in the field of artificial intelligence is focused, the ultimate goal for many is still strong AI. After decades, even centuries, of strong AI being a central aspect of science fiction, most of us have taken for granted the idea that a sentient artificial intelligence will someday be created. However, many believe that this isn’t even possible, and a great deal of the debate on the topic revolves around philosophical concepts regarding sentience, consciousness, and intelligence.

Consciousness, AI, and Philosophy

This discussion starts with a very simple question: what is consciousness? Though the question is simple, anyone who has taken an Introduction to Philosophy course can tell you that the answer is anything but. This is a question that has had us collectively scratching our heads for millennia, and few people who have seriously tried to answer it have come to a satisfactory answer.

What is Consciousness?

Some philosophers have even posited that consciousness, as it’s generally thought of, doesn’t even exist. For example, in Consciousness Explained, Daniel Dennett argues that consciousness is an elaborate illusion created by our minds. This is a logical extension of the philosophical concept of determinism, which posits that every cause has only a single possible effect. Taken to its logical extreme, deterministic theory would state that every thought (and therefore consciousness) is the physical reaction to preceding events (down to atomic interactions).

Most people reject this explanation as absurd: our experience of consciousness is so integral to our being that denying it seems unacceptable. However, even if one were to accept the idea that consciousness is possible, and also that oneself possesses it, how could it ever be proven that another entity also possesses it? This is the intellectual realm of solipsism and the philosophical zombie.

Solipsism is the idea that a person can only truly prove their own consciousness. Consider Descartes’ famous quote “Cogito ergo sum” (I think therefore I am). While to many this is a valid proof of one’s own consciousness, it does nothing to address the existence of consciousness in others. A popular thought exercise to illustrate this conundrum is the possibility of a philosophical zombie.

Philosophical Zombies

A philosophical zombie is a human who does not possess consciousness, but who can mimic consciousness perfectly. From the Wikipedia page on philosophical zombies: “For example, a philosophical zombie could be poked with a sharp object and not feel any pain sensation, but yet behave exactly as if it does feel pain (it may say “ouch” and recoil from the stimulus, and say that it is in pain).” Further, this hypothetical being might even think that it did feel the pain, though it really didn’t.

As an extension of this thought experiment, let’s posit that a philosophical zombie possessing an evolutionary advantage was born early in humanity’s existence. Over time, this advantage allowed for successful reproduction, and eventually conscious human beings were entirely replaced by these philosophical zombies, such that every other human on Earth was one. Could you prove that the people around you actually possessed consciousness, and weren’t just very good at mimicking it?

This problem is central to the debate surrounding strong AI. If we can’t even prove that another person is conscious, how could we prove that an artificial intelligence was? John Searle not only illustrates this in his famous Chinese room thought experiment, but further puts forward the opinion that conscious artificial intelligence is impossible in a digital computer.

The Chinese Room

The Chinese room argument as Searle originally published it goes something like this: suppose an AI were developed that takes Chinese characters as input, processes them, and produces Chinese characters as output. It does so well enough to pass the Turing test. Does it then follow that the AI actually “understood” the Chinese characters it was processing?

Searle says that it doesn’t, and that the AI is just acting as if it understood the Chinese. His rationale is that a man (who understands only English) placed in a sealed room could, given the proper instructions and enough time, do the same. This man could receive a request in Chinese, follow English instructions on what to do with those Chinese characters, and provide the output in Chinese. This man never actually understood the Chinese characters, but simply followed the instructions. Likewise, Searle argues, an AI would not actually understand what it is processing; it would just be acting as if it did.
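The rule-following at the heart of the argument can be sketched in a few lines of Python. This is only an illustrative toy: the `RULE_BOOK` entries, the `FALLBACK` reply, and the `chinese_room` function are all hypothetical names invented here, and a two-entry lookup table is nowhere near the kind of system that could pass a Turing test. The point is simply that the program matches and copies symbols without anything in it representing what they mean.

```python
# Hypothetical rule book: made-up entries for illustration only.
# Each rule says "if these symbols come in, send these symbols out".
RULE_BOOK = {
    "你好吗": "我很好",
    "你是谁": "我是一个房间",
}

# Hypothetical reply used when no rule matches the incoming symbols.
FALLBACK = "请再说一遍"


def chinese_room(message: str) -> str:
    """Return the reply the rule book dictates for the incoming symbols.

    The function only compares and copies character strings; nothing in
    it represents what any of the symbols mean.
    """
    return RULE_BOOK.get(message, FALLBACK)


if __name__ == "__main__":
    for incoming in ("你好吗", "天气怎么样"):
        print(incoming, "->", chinese_room(incoming))
```

Whether a vastly larger version of such a rule book would amount to understanding is exactly what Searle and his critics disagree about.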

An illustration of the Chinese room, courtesy of cognitivephilosophy.net

It’s no coincidence that the Chinese room thought experiment is similar to the idea of a philosophical zombie, as both seek to address the difference between true consciousness and the appearance of consciousness. The Turing Test is often criticized as being overly simplistic, but Alan Turing had carefully considered this kind of objection before introducing his test. That was about 30 years before Searle published his argument, but Turing had anticipated such a concept as an extension of the “problem of other minds” (the same problem that’s at the heart of solipsism).

Polite Convention

Turing addressed this problem by giving machines the same “polite convention” that we give to other humans. Though we can’t know that other humans truly possess the same consciousness that we do, we act as if they do as a matter of practicality; we’d never get anything done otherwise. Turing believed that discounting an AI based on a problem like the Chinese room would be holding that AI to a higher standard than we hold other humans. Thus, the Turing Test equates perfect mimicry of consciousness with actual consciousness for practical reasons.

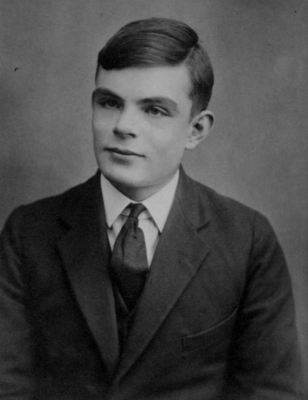

Alan Turing, creator of the Turing Test and the “polite convention” philosophy

The task of defining “true” consciousness is, for now, best left to philosophers, as far as most modern AI researchers are concerned. Trevor Sands (an AI researcher for Lockheed Martin, who stresses that his statements reflect his own opinions, and not necessarily those of his employer) says “Consciousness or sentience, in my opinion, are not prerequisites for AGI, but instead phenomena that emerge as a result of intelligence.”

Albert takes an approach which mirrors Turing’s, saying “if something acts convincingly enough like it is conscious we will be compelled to treat it as if it is, even though it might not be.” While debates go on among philosophers and academics, researchers in the field have been working all along. Questions of consciousness are set aside in favor of work on developing AGI.

History of AI Development

Modern AI research was kicked off in 1956 with a conference held at Dartmouth College. This conference was attended by many who later became experts in AI research, and who were primarily responsible for the early development of AI. Over the next decade, they would introduce software that fueled excitement about the growing field. Computers were able to play (and win) at checkers, solve math proofs (in some cases producing solutions more efficient than those previously found by mathematicians), and provide rudimentary language processing.

Unsurprisingly, the potential military applications of AI garnered the attention of the US government, and by the ’60s the Department of Defense was pouring funds into research. Optimism was high, and this funded research was largely undirected. It was believed that major breakthroughs in artificial intelligence were right around the corner, and researchers were left to work as they saw fit. Marvin Minsky, a prolific AI researcher of the time, stated in 1967 that “within a generation … the problem of creating ‘artificial intelligence’ will substantially be solved.”

Unfortunately, the promise of artificial intelligence wasn’t delivered upon, and by the ’70s optimism had faded and government funding was substantially reduced. Lack of funding meant that research was dramatically slowed, and few advancements were made in the following years. It wasn’t until the ’80s that progress in the private sector with “expert systems” provided financial incentives to invest heavily in AI once again.

Throughout the ’80s, AI development was again well-funded, primarily by the American, British, and Japanese governments. Optimism reminiscent of that of the ’60s was common, and again big promises were made about true AI being just around the corner. Japan’s Fifth Generation Computer Systems project was supposed to provide a platform for AI advancement. But this system never came to fruition, and that, along with other failures, once again led to declining funding for AI research.

Around the turn of the century, practical approaches to AI development and use were showing strong promise. With access to massive amounts of information (via the internet) and powerful computers, weak AI was proving very beneficial in business. These systems were used to great success in the stock market, for data mining and logistics, and in the field of medical diagnostics.

Over the last decade, advancements in neural networks and deep learning have led to a renaissance of sorts in the field of artificial intelligence. Currently, most research is focused on the practical applications of weak AI, and the potential of AGI. Weak AI is already in use all around us, major breakthroughs are being made in AGI, and optimism about artificial intelligence is once again high.

Current Approaches to AI Development

Researchers today are investing heavily in neural networks, which loosely mirror the way a biological brain works. While true virtual emulation of a biological brain (with modeling of individual neurons) is being studied, the more practical approach right now is deep learning performed by neural networks. The idea is that the way a brain processes information is important, but that it isn’t necessary for it to be done biologically.

Neural networks use simple nodes connected to form complex systems [Photo credit: Wikipedia]
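To make the idea of "simple nodes connected to form complex systems" concrete, here is a minimal Python sketch. It is not how any of the researchers quoted here build their systems: the function names and the hand-picked weights in `tiny_network` are assumptions made purely for illustration, and a real deep learning system would learn its weights from data rather than have them written in by hand.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def node(inputs, weights, bias):
    """One node: a weighted sum of the inputs plus a bias, then sigmoid."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(total)


def layer(inputs, weight_rows, biases):
    """A layer is several nodes that all read the same inputs."""
    return [node(inputs, w, b) for w, b in zip(weight_rows, biases)]


def tiny_network(inputs):
    """Two hidden nodes feeding one output node.

    The weights are arbitrary illustrative values, not learned ones.
    """
    hidden = layer(inputs, [[0.5, -0.4], [0.3, 0.8]], [0.0, -0.1])
    return layer(hidden, [[1.0, -1.0]], [0.0])[0]


if __name__ == "__main__":
    print(tiny_network([1.0, 2.0]))
```

Each node does very little on its own; whatever capability a network has comes from how many nodes there are, how they are connected, and what values the weights take.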

As an AI researcher specializing in deep learning, it’s Albert’s job to try to teach neural networks to answer questions. “The dream of question answering is to have an oracle that is able to ingest all of human knowledge and be able to answer any questions about this knowledge” is Albert’s reply when asked what his goal is. While this isn’t yet possible, he says “We are up to the point where we can get an AI to read a short document and a question and extract simple information from the document. The exciting state of the art is that we are starting to see the beginnings of these systems reasoning.”

Trevor Sands does similar work with neural networks for Lockheed Martin. His focus is on creating “programs that utilize artificial intelligence techniques to enable humans and autonomous systems to work as a collaborative team.” Like Albert, Sands uses neural networks and deep learning to process huge amounts of data intelligently. The hope is to come up with the right approach, and to create a system which can be given direction to learn on its own.

Albert describes the difference between earlier weak AI systems and the more recent neural network approaches: “You’d have vision people with one algorithm, and speech recognition with another, and yet others for doing NLP (Natural Language Processing). But, now they are all moving over to use neural networks, which is basically the same technique for all these different problems. I find this unification very exciting. Especially given that there are people who think that the brain and thus intelligence is actually the result of a single algorithm.”

Basically, as an AGI, the ideal neural network would work for any kind of data. Like the human mind, this would be true intelligence that could process any kind of data it was given. Unlike current weak AI systems, it wouldn’t have to be developed for a specific task. The same system that might be used to answer questions about history could also advise an investor on which stocks to purchase, or even provide military intelligence.
