As artificial intelligence grows, so do perceived threats to human uniqueness

Source: Rosalie Chan

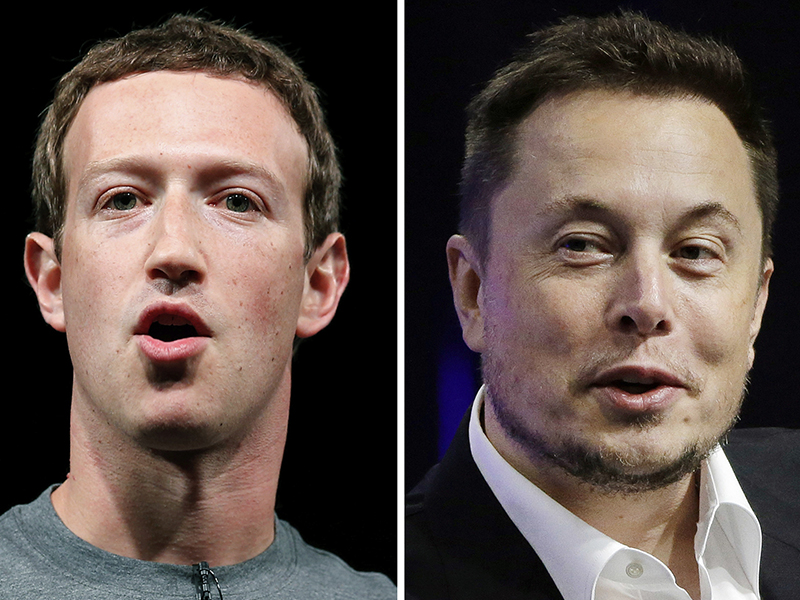

SpaceX and Tesla CEO Elon Musk got into a spat recently on Twitter with Facebook’s Mark Zuckerberg over the dangers of artificial intelligence.

Musk urged a group of governors to proactively regulate AI, which he views as a “fundamental risk to the existence of human civilization.”

“Until people see robots going down the street killing people, they don’t know how to react because it seems so ethereal,” Musk said.

Zuckerberg shot back, saying fearmongering about AI is “irresponsible.”

The two divergent views on AI reflect the existential questions humans face about their uniqueness in the universe.

Today, robots are quickly populating our cultural landscape. Engineers are building robots that can converse, perform dangerous tasks and even have sex.

Like Musk, people may see robots as a threat, especially as some become increasingly humanlike.

Tesla and SpaceX CEO Elon Musk responds to a question by Nevada Republican Gov. Brian Sandoval during the closing plenary session, titled “Introducing the New Chairs Initiative – Ahead,” on the third day of the National Governors Association’s meeting on July 15, 2017, in Providence, R.I. (AP Photo/Stephan Savoia)

Even the appearance of humanlike robots causes many people discomfort. This phenomenon is called “the uncanny valley,” a hypothesis proposed in 1970 by Japanese roboticist Masahiro Mori. According to researchers, the discomfort stems from existential questions about the nature of humanity.

This hypothesis says that the more humanlike something is, the more comfortable we feel with it. But this comfort level suddenly dips when the object closely resembles a human.

Researchers have corroborated this hypothesis, and many factors contribute to it. For one, these humanlike robots remind us of our own mortality.

“They contain both life and the appearance of life,” said Karl MacDorman, associate professor in the Human-Computer Interaction program of Indiana University. “It reminds us that at some point, we could be inanimate after death.”

What’s more, the idea that robots may have a consciousness and become almost indistinguishable from humans disturbs some, as recent movies such as “Ex Machina” and “Her” attest. The possibility that humans are not unique opens up questions about the nature of humanity.

Philosophers such as Daniel Dennett describe humans as nothing but complicated robots made of flesh. But Jews, Christians and Muslims believe humans are made in God’s image, the apex of God’s created order.

“I think particularly in the Christian tradition and Jewish tradition there’s this concept of Imago Dei, which means we are created in the image and likeness of God,” said Brent Waters, Christian ethics professor at Garrett-Evangelical Theological Seminary. “To try to create something unique that God created may also be a form of idolatry.”

People from cultures that attach spiritual significance to trees or stones may have an easier time with robots.

Karl MacDorman. Photo courtesy of Indiana University

MacDorman points out that Japanese society, which is both Shinto and Buddhist, has a general tendency to be more accepting of robots, including humanlike ones. For example, robots interact with customers in department stores, and engineers have built companion robots for families and the elderly.

On the other hand, followers of Abrahamic religions tend to be more disturbed by robots that bridge the gap between the human and inanimate.

“I would say they make us uncomfortable because they’re different,” said techno-theologian and futurist Christopher Benek, associate pastor at First Presbyterian Church of Fort Lauderdale, Fla. “They’re creepy. They’re off somehow. But from a theological standpoint, we are special because we are loved by God. I think it’s really important for us to continue to wrestle with it. We’re challenged by something that might be able to have more power than we have.”

The human sense of self is also grounded in biology. Even newborns show signs that they distinguish their bodies as unique, said Philippe Rochat, a psychology professor at Emory University who recently worked on a study verifying the uncanny valley.

“Identity in being unique is a necessary ingredient for us to move forward in the world and adapt to the world,” Rochat said. “This is how the mind works. It works to create meaning. … We have to fundamentally distinguish ourselves as other entities in the world.”

And while people might get used to humanlike robots, the uncanny valley is still a perceptual instinct.

“People can have a very uncanny feeling even if they’re exposed for a 10th of a second,” MacDorman said. “There is a conflict, and our brain is immediately detecting the problem and the error signal.”

This instinct comes from the conflict between us imagining emotions in robots and knowing these robots are inanimate objects, said Catrin Misselhorn, director of the Institute of Philosophy at the University of Stuttgart in Germany. Humans empathize when they perceive that another human is about to produce an emotion.

“If we see a real person as opposed to a robot, we infer that this face has thoughts, emotions, feelings and physically has a mental state,” Rochat said. “We have an automatic inference of mental state. You dehumanize someone when you reduce this inference or eliminate this inference of mental state in this humanlike entity.”

Although robots cannot produce or even show emotions, humans involuntarily imagine that robots can experience emotions or pain.

The fact that humans can feel empathy for humanlike robots raises ethical implications, Misselhorn said. For example, service robots may try to persuade people to buy things, but their near-human appearance could also be exploited in more malicious ways.

While artificial intelligence may benefit human lives in many ways, setting limits for robots and drawing the line between humans and machines have become increasingly relevant in government meetings. In the future, governments may have to clearly define robot rights.

“The fact that we feel empathy with inanimate objects makes us prone to some types of manipulation so we should think about where we want humanlike robots and where we don’t want them,” Misselhorn said.

Still, artificial intelligence can be used for the good of humanity, said Benek. If AI gains a humanlike intelligence, robots may even be able to practice religion.

“I don’t see anywhere biblically where it prohibits us from creating, as long as it’s in accordance with God’s will,” Benek said. “I think if they’re truly autonomous and significantly more intelligent than we are currently, to me, it makes us want to advocate for the good of humanity and the Earth and creation.”
