Online hate speech could be contained like a computer virus

Source: University of Cambridge

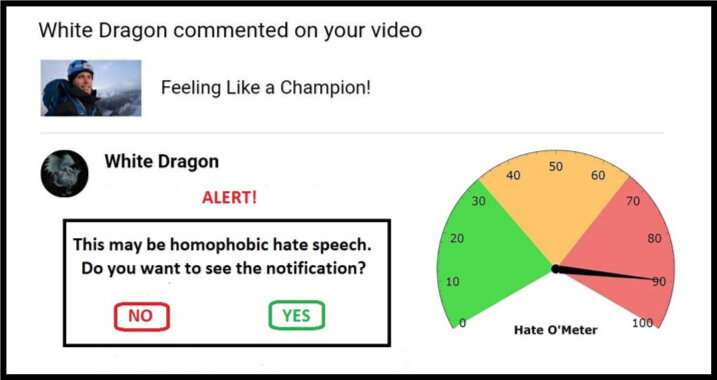

Example of a possible approach for a quarantine screen, complete with Hate O'Meter. Credit: Stefanie Ullman

The spread of hate speech via social media could be tackled using the same "quarantine" approach deployed to combat malicious software, according to University of Cambridge researchers.

Definitions of hate speech vary depending on nation, law and platform, and just blocking keywords is ineffectual: graphic descriptions of violence need not contain obvious ethnic slurs to constitute racist death threats, for example.

As such, hate speech is difficult to detect automatically. It has to be reported by those exposed to it, after the intended "psychological harm" is inflicted, with armies of moderators required to judge every case.

This is the new front line of an ancient debate: freedom of speech versus poisonous language.

Now, an engineer and a linguist have published a proposal in the journal Ethics and Information Technology that harnesses cyber security techniques to give control to those targeted, without resorting to censorship.

Cambridge language and machine learning experts are using databases of threats and violent insults to build algorithms that can provide a score for the likelihood of an online message containing forms of hate speech.

As these algorithms are refined, potential hate speech could be identified and "quarantined". Users would receive a warning alert showing a "Hate O'Meter" (the hate speech severity score) and the sender's name, with an option to either view the content or delete it unseen.
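The quarantine flow described above can be illustrated with a minimal Python sketch. It is not the researchers' system: the names `Message`, `QuarantineNotice`, `triage` and `resolve`, the 0.5 threshold, and the 0-100 severity scale are all assumptions made for illustration, and the `score` argument stands in for a trained classifier that returns the likelihood of a message containing hate speech.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Message:
    sender: str
    text: str


@dataclass
class QuarantineNotice:
    """What the recipient sees instead of the message itself (hypothetical)."""
    sender: str
    severity: int      # 0-100 value for a "Hate O'Meter"-style display
    message: Message   # held back until the recipient decides


def triage(
    messages: List[Message],
    score: Callable[[str], float],
    threshold: float = 0.5,  # assumed cut-off, not taken from the article
) -> Tuple[List[Message], List[QuarantineNotice]]:
    """Deliver low-scoring messages and quarantine the rest."""
    inbox: List[Message] = []
    quarantined: List[QuarantineNotice] = []
    for msg in messages:
        # Clamp the classifier output to the range [0, 1].
        likelihood = min(max(score(msg.text), 0.0), 1.0)
        if likelihood >= threshold:
            notice = QuarantineNotice(msg.sender, round(likelihood * 100), msg)
            quarantined.append(notice)
        else:
            inbox.append(msg)
    return inbox, quarantined


def resolve(notice: QuarantineNotice, view: bool) -> Optional[Message]:
    """Return the message if the recipient chooses to view it, else drop it unseen."""
    return notice.message if view else None
```

In this sketch the recipient, not the platform, makes the final decision: nothing is deleted until `resolve` is called with `view=False`, which mirrors the article's point about giving control to those targeted rather than censoring content.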

This approach is akin to spam and malware filters, and researchers from the 'Giving Voice to Digital Democracies' project believe it could dramatically reduce the amount of hate speech people are forced to experience. They are aiming to have a prototype ready in early 2020.

"Hate speech is a form of intentional online harm, like malware, and can therefore be handled by means of quarantining," said co-author and linguist Dr. Stefanie Ullman. "In fact, a lot of hate speech is actually generated by software such as Twitter bots."

"Companies like Facebook, Twitter and Google generally respond reactively to hate speech," said co-author and engineer Dr. Marcus Tomalin. "This may be okay for those who don't encounter it often. For others it's too little, too late."

"Many women and people from minority groups in the public eye receive anonymous hate speech for daring to have an online presence. We are seeing this deter people from entering or continuing in public life, often those from groups in need of greater representation," he said.
