EventDrop: a method to augment asynchronous event data

Source: Ingrid Fadelli

An example of augmented events with EventDrop. For better visualization, the event frame representation is used to visualize the outcome of augmented events. Credit: Gu et al.

Event sensors, such as DVS event cameras and NeuTouch tactile sensors, are sophisticated bio-inspired devices that mimic event-driven communication mechanisms naturally occurring in the brain. In contrast with conventional sensors, such as RGB cameras, which are designed to synchronously capture a scene at a fixed rate, event sensors can capture changes (i.e., events) occurring in a scene asynchronously.

For instance, DVS cameras capture changes in luminosity over time for individual pixels, rather than collecting intensity images as conventional RGB cameras do. Event sensors have several advantages over conventional sensing technologies, including a higher dynamic range, a higher temporal resolution, lower latency and higher power efficiency.

Because of these advantages, bio-inspired sensors have become the focus of many research studies, including studies aimed at training deep learning algorithms to analyze event data. While many deep learning methods perform well on tasks that involve the analysis of event data, their performance can decline significantly when they are applied to new data (i.e., data they were not trained on), a problem known as overfitting.

Researchers at Chongqing University, National University of Singapore, the German Aerospace Center and Tsinghua University recently created EventDrop, a new method to augment asynchronous event data and limit the adverse effects of overfitting. This method, introduced in a paper pre-published on arXiv and set to be presented at the International Joint Conference on Artificial Intelligence 2021 (IJCAI-21) in July, could improve the generalization of deep learning models trained on event data.

"A challenging problem in deep learning is overfitting, which means that a model may exhibit excellent performance on training data, and yet degrade dramatically in performance when validated against new and unseen data," Fuqiang Gu, one of the researchers who developed EventDrop, told TechXplore. "A simple solution to the overfitting problem is to significantly increase the amount of labeled data, which is theoretically feasible, but may be cost-prohibitive in practice. The overfitting problem is more severe in learning with event data since event datasets remain small relative to conventional datasets (e.g., ImageNet)."

Data augmentation is known to be an effective technique to generate artificial data and improve the ability of deep learning models to generalize well when applied to new datasets. Examples of augmentation techniques for image data include translation, rotation, flipping, cropping, shearing and changing contrast/sharpness.

Strategies used by EventDrop, where t indicates time dimension, x denotes the pixel coordinate (only one dimension is shown here for clarity). Black dots represent original events, and blue dots are the events to be dropped. Red dashed lines represent threshold borders. (a) Original events that are triggered asynchronously. (b) Random drop strategy. (c) Drop by time strategy. (d) Drop by area strategy. Credit: Gu et al.

Event data differs significantly from frame-like data (e.g., static images). As a result, augmentation techniques developed for frame-like data typically cannot be applied directly to asynchronous event data. With this in mind, Gu and his colleagues created EventDrop, a new technique specifically designed to augment asynchronous event data.

"Our work was motivated by two observations," Gu said. "The first is that the output of event cameras for the same scene under the same lighting condition may vary significantly over time. This may be because event cameras are somehow noisy, and events are usually triggered when the change about the scene reaches or surpasses a threshold. By randomly dropping a proportion of events, it is possible to improve the diversity of event data and hence increase the performance of downstream applications."

The second observation that inspired the development of EventDrop is that when completing certain tasks on real data, such as object recognition and tracking, the scenes in images processed by deep learning algorithms can be partially occluded. Therefore, the ability of machine learning algorithms to generalize well across different data depends heavily on how diverse the data they are trained on is in terms of occlusion.

In other words, training data should ideally contain images with varying degrees of occlusion. Unfortunately, however, most available training datasets have limited variance in terms of occlusion.

"A machine learning model trained on the data with limited or no (totally visible) occlusion variance may generalize poorly on new samples that are partially occluded," Gu explained. "By generating new samples that simulate partially occluded cases, the model is able to better recognize objects with partial occlusion."

EventDrop works by 'dropping' events selected with various strategies to increase the diversity of training data (e.g., simulating different levels of occlusion). To 'drop' events, it employs three strategies, called random drop, drop by time and drop by area. The first strategy prepares the model for noisy event data, while the other two strategies simulate occlusion in images.

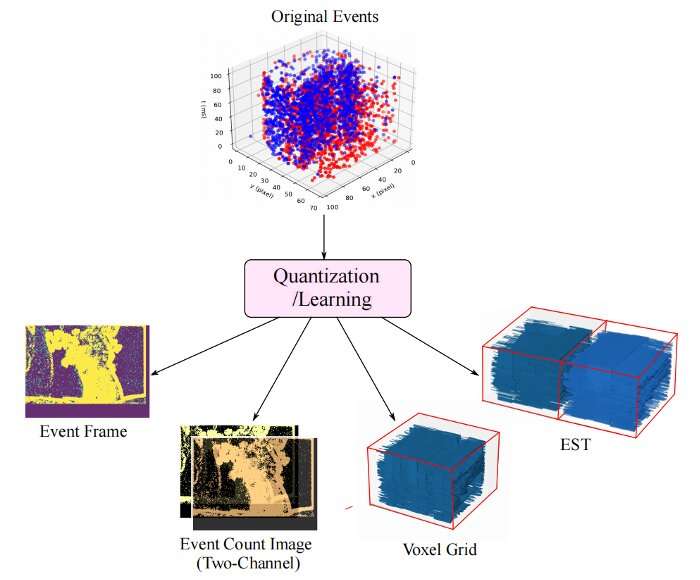

General framework for converting events into popular event representations. The original asynchronous events can be transformed into frame-like data through quantization or learning (e.g., neural networks). Credit: Gu et al.
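As a rough illustration of the quantization path mentioned in the caption above, the sketch below accumulates events into an event-count frame with one channel per polarity. The `(x, y, t, p)` row layout and the function name `events_to_frame` are assumptions made for this example; they are not taken from the paper, which may use other representations.

```python
import numpy as np

def events_to_frame(events, width, height):
    """Accumulate events into a 2-channel count image (one channel per polarity).

    Assumed layout (hypothetical): each row of `events` is (x, y, t, p),
    with p > 0 for positive polarity and p <= 0 otherwise.
    """
    frame = np.zeros((2, height, width), dtype=np.int32)
    x = events[:, 0].astype(int)
    y = events[:, 1].astype(int)
    channel = (events[:, 3] > 0).astype(int)  # 1 = positive, 0 = negative
    # np.add.at performs an unbuffered add, so pixels that fire
    # several times are counted once per event.
    np.add.at(frame, (channel, y, x), 1)
    return frame
```

The timestamps are discarded here, which is what makes the result frame-like; representations that keep some timing information would need a different accumulation rule.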

"The basic goal of random drop is to randomly drop a proportion of events in the sequence, to overcome the noise originating from event sensors," Gu said. "Drop by time is to drop events triggered within a random period of time, by trying to increase the diversity of training data, stimulating the case that objects are partially occluded during certain time period. Finally, drop by area drops events triggered within a randomly selected pixel area, while also trying to improve the diversity of data by simulating various cases in which some parts of objects are partially occluded."
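The three strategies Gu describes can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the `(x, y, t, p)` row layout, the function names, the way the time window and pixel box are sized, and the ratio range used in `event_drop` are all assumptions made for the example.

```python
import numpy as np

# Assumed layout (hypothetical): each row is one event (x, y, t, p).

def random_drop(events, ratio, rng):
    """Drop roughly `ratio` of the events, chosen uniformly at random."""
    keep = rng.random(len(events)) >= ratio
    return events[keep]

def drop_by_time(events, ratio, rng):
    """Drop all events in one random time window covering `ratio` of the duration."""
    t = events[:, 2]
    t0, t1 = t.min(), t.max()
    window = ratio * (t1 - t0)
    start = rng.uniform(t0, t1 - window)
    keep = (t < start) | (t > start + window)
    return events[keep]

def drop_by_area(events, width, height, ratio, rng):
    """Drop all events in one random box spanning `ratio` of each image side."""
    box_w, box_h = ratio * width, ratio * height
    x0 = rng.uniform(0, width - box_w)
    y0 = rng.uniform(0, height - box_h)
    x, y = events[:, 0], events[:, 1]
    inside = (x >= x0) & (x < x0 + box_w) & (y >= y0) & (y < y0 + box_h)
    return events[~inside]

def event_drop(events, width, height, rng):
    """Apply one randomly chosen strategy, or leave the events unchanged."""
    ratio = rng.uniform(0.1, 0.5)  # illustrative range, not from the article
    choice = rng.integers(4)       # 0 means no augmentation
    if choice == 1:
        return random_drop(events, ratio, rng)
    if choice == 2:
        return drop_by_time(events, ratio, rng)
    if choice == 3:
        return drop_by_area(events, width, height, ratio, rng)
    return events

# Usage on synthetic events for a hypothetical 64x48 sensor.
rng = np.random.default_rng(0)
n = 1000
sample = np.column_stack([
    rng.integers(0, 64, n),          # x
    rng.integers(0, 48, n),          # y
    np.sort(rng.uniform(0, 1, n)),   # t
    rng.choice([-1, 1], n),          # p
]).astype(float)
augmented = event_drop(sample, 64, 48, rng)
print(len(sample), len(augmented))
```

Because each function only filters rows, the output is always a subset of the input events and no parameters need to be learned, which is consistent with the low cost the article describes.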

The technique is easy to implement and computationally inexpensive. Moreover, it does not require any parameter learning, so it can be applied to a variety of tasks that involve the analysis of event data.

"To the best of our knowledge, EventDrop is the first method that augments asynchronous event data by dropping events," Gu said. "It can work with event data and deals with both sensor noise and occlusion. By dropping events selected with various strategies, it can increase the diversity of training data (e.g., to simulate various levels of occlusion)."

EventDrop can significantly improve the generalization of deep learning algorithms across different event datasets. In addition, it can enhance event-based learning in both deep neural networks (DNNs) and spiking neural networks (SNNs).

The researchers evaluated EventDrop in a series of experiments using two event datasets, N-Caltech101 and N-Cars. They found that by dropping events, their method could significantly improve the accuracy of different deep neural networks on object classification tasks on both datasets.

"While in our paper we showed the application of our approach for event-based learning with deep nets, it can be also applied to learning with SNNs," Gu said. "In our future work, we will apply our approach to other event-based learning tasks for improving the robustness and reliability, such as visual inertial odometry, place recognition, pose estimation, traffic flow estimation, and simultaneous localization and mapping."
