A neural network-based optimization technique inspired by the principle of annea

Source: Ingrid Fadelli

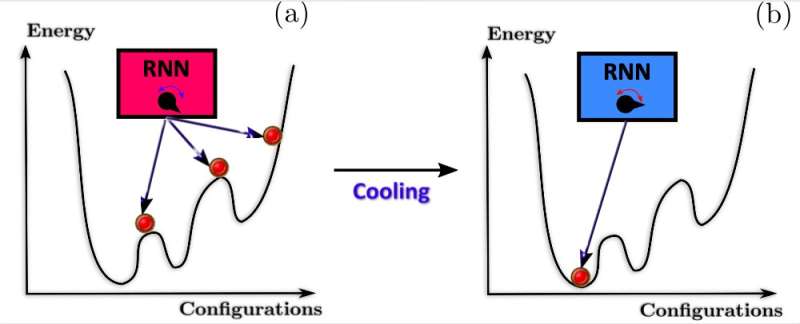

A picture illustrating the use of a recurrent neural network (RNN) for the purpose of finding the lowest point in an optimization problem through classical annealing. (a) Initially at high temperatures, the hot RNN (in red) explores the landscape, defined by an optimization problem of interest, by making smarter moves through the space of configurations. After cooling temperature during annealing, the cold RNN in figure (b) points out to the lowest energy configuration in the rugged landscape. Credit: Hibat-Allah et al.

A picture illustrating the use of a recurrent neural network (RNN) for the purpose of finding the lowest point in an optimization problem through classical annealing. (a) Initially at high temperatures, the hot RNN (in red) explores the landscape, defined by an optimization problem of interest, by making smarter moves through the space of configurations. After cooling temperature during annealing, the cold RNN in figure (b) points out to the lowest energy configuration in the rugged landscape. Credit: Hibat-Allah et al.

Optimization problems involve the identification of the best possible solution among several possibilities. These problems can be encountered in real-world settings, as well as in most scientific research fields.

In recent years, computer scientists have developed increasingly advanced computational methods for solving optimization problems. Some of the most promising techniques developed so far are based on artificial neural networks (ANNs).

Researchers at the Vector Institute, University of Waterloo and Perimeter Institute for Theoretical Physics in Canada have recently developed variational neural annealing, a new optimization method that merges recurrent neural networks (RNNs) with the principle of annealing. This new technique, introduced in a paper published in Nature Machine Intelligence, works by generalizing the distribution of the possible solutions to a given problem using a parametrized model.

"The topic of our recent research is at the intersection between machine learning, statistical physics and quantum physics," Mohamed Hibat-Allah, one of the researchers who carried out the study, told TechXplore. "More specifically, it aims to solve real-world optimization through a new algorithm based on the theory of annealing and RNNs borrowed from the field of natural language processing (NLP)."

The idea for this recent paper originated during a series of conversations between Hibat-Allah and his collaborators. Ultimately, the researchers set out to create a new algorithm that would outperform existing optimization methods based on both classical and quantum annealing principles.

"At the time, I was teaching at a school in Bogotá with Juan Carrasquilla and Roger Melko," Estelle M. Inack, another researcher involved in the study, told TechXplore. "During one of ours chats, Juan suggested me the idea of using annealing in a variational Monte Carlo set-up. When we returned to Waterloo, he put me in touch with Mohamed, his Ph.D. student at the time. This is how our project started."

Some of the most difficult optimization problems are known to be nondeterministic polynomial time (NP)-hard problems. Essentially, this means that they are highly complex and either cannot be solved using simple computational methods or solving them would require enormous amounts of time.

As simple algorithms cannot effectively tackle these problems, researchers worldwide have been trying to create more efficient techniques that could solve them within realistic timescales. The approach created by Hibat-Allah, Inack and their colleagues is one of the most recent efforts aimed at addressing optimization problems more efficiently.

"The framework we presented is based on the principle of annealing," Hibat-Allah explained. "The latter is inspired from annealing in metallurgy, where one can heat a material and let it cool down in a slow manner to bring it to a lower energy state that is more robust and more stable. This process has inspired the invention of simulated annealing, which aims to find numerical solutions to optimization problems."

The most characteristic feature of the optimization method introduced by this research group is that it merges the efficiency and computational power of ANNs with the advantages of simulated annealing techniques. More specifically, Hibat-Allah, Inack and their colleagues used RNNs, a class of algorithms that have been found to be particularly promising for NLP applications. While in NLP studies these algorithms are trained to process human language, the researchers re-purposed them and trained them to solve optimization problems.

"In simple terms, if you think of an optimization problem as a rugged landscape filled with valleys, the classical version of annealing aims to find the lowest point in the landscape by jumping through barriers using thermal fluctuations," Hibat-Allah said. "On the other hand, the quantum version of annealing attempts to solve this problem by digging tunnels through the barriers for the hope of finding deeper valleys."

Using RNNs, Hibat-Allah, Inack and their colleagues found that they were able to solve optimization problems more efficiently. In fact, in contrast with more conventional numerical implementations of annealing, their RNN-based method made smarter choices, improving the efficiency of both classical and quantum annealing methods.

"We demonstrated the ability to encode the annealing paradigm with autoregressive networks and the performance that is derived with respect to standard simulated classical and quantum annealing is the most important achievement of our study," Inack said. "Our work takes optimization problem solving to a new dimension that directly exploits the infrastructures used to train advanced neural networks, via rapid iteration using for example TensorFlow or Pytorch accelerated on GPUs / TPUs."

Hibat-Allah, Inack and their colleagues evaluated their approach in a series of tests, comparing its performance with that of standard annealing optimization methods based on numerical simulations. Their framework outperformed all the techniques they compared it to on different prototypical optimization problems. In the future, the algorithm introduced by this team of researchers could be applied to numerous real-world optimization problems, helping specialists in a variety of fields to solve these problems more efficiently.

"Our recent paper resulted in a patent filing," Inack said. "My plan is to use the framework we developed at my newly created startup, yiyaniQ, to achieve faster and more accurate derivative pricing calculations."

In their next studies, the researchers plan to test their algorithm's performance on more realistic problems, while also comparing it with that of other state-of-the-art optimization techniques. In addition, they hope to develop their technique further, by substituting some of its components or integrating additional ones.

"It would be also interesting to improve our method by using more advanced neural network architectures or by choosing different cooling schemes during annealing," Hibat-Allah added. "We do not know yet how much improvement we can get, but we can learn a lot through these investigations and potentially find a better algorithm that can improve current solutions to optimization problems."

| }

|