AI rules: From three to twenty-three

Source: C. Raja Mohan

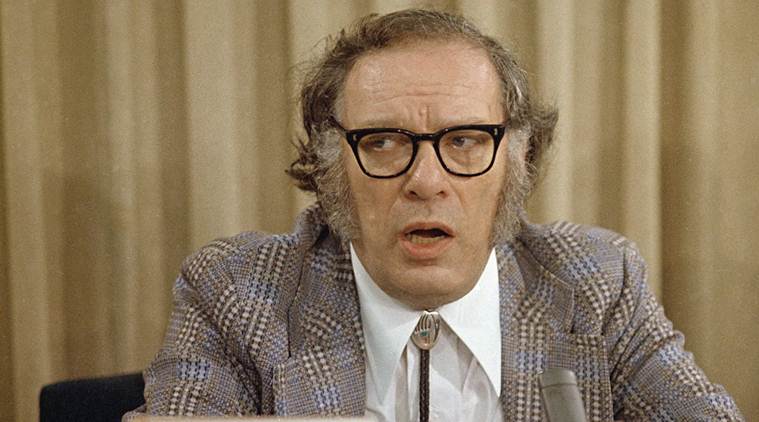

Isaac Asimov (Source: AP photo)

Many moons ago, in 1942, when intelligent computers began to figure in science fiction, Isaac Asimov laid down three rules for building robots: they should do no harm to humans, either through action or inaction; they must obey human commands unless doing so conflicts with the first rule; and they should be able to secure their own existence so long as this does not violate the first two rules.

As computing power grows rapidly and begins to envelop many aspects of our lives, there has been growing concern that Asimov's rules, sensible as they are, may not be adequate for the 21st century. Scientists like Stephen Hawking and high-tech entrepreneurs like Elon Musk have sounded alarm bells about superintelligent computers ‘going rogue’ and dominating, if not destroying, human civilization.

Not everyone agrees that we are close to such a catastrophic moment. But the pace of progress in AI development, the expanding scope for its application, and the growing intensity of the current research effort suggest that it may not be too soon to revisit and revise the Asimov commands on computing.

That is precisely what a large group of researchers, businessmen, lawyers and ethicists did last month when they produced a list of 23 recommendations for regulating the future of Artificial Intelligence. Although endorsed by nearly 900 AI researchers, the principles may appear much too idealistic and vague.

Techno-optimists will dismiss the code as a ‘Luddite manifesto’. Some will pick nits over the difficulty of defining such things as ‘human values’. Even more important, lawyers will say the guidelines are not enforceable. For all its limitations, the AI code does constitute a significant reflection on the extraordinary challenges posed by robotics and machine learning.

A critical proposition is that the goal of AI research should be the pursuit of ‘beneficial intelligence’ and not ‘undirected intelligence’. AI ‘should benefit and empower as many people as possible’ and the prosperity generated by AI ‘should be shared broadly’. The idea of shared benefits in the guidelines is matched by the proposition that investments in AI research should be accompanied by funding for research in law, ethics and social studies that will address the broader questions emerging out of advances in computing. The code also calls for more cooperation, trust, and transparency among AI researchers and developers.

The new code also urges the AI community to avoid cutting corners on safety standards as its members compete with each other to reap the multiple commercial benefits of the emerging technology. The principles insist on verifiable safety of AI systems throughout their operational life. Transparency in the systems is also considered critical. The code insists that if an AI system causes harm, ‘it should be possible to ascertain why.’

Ethics and values form an important dimension of the proposed AI code. It insists that the builders of AI systems are ‘stakeholders in the moral implications of their use and misuse’ and have the responsibility to design them to be ‘compatible with ideals of human dignity, rights, freedoms, and cultural diversity’.

The theme of human control over robots emerges as the biggest long-term challenge addressed by the AI code. In a reference to the internal and international security threats posed by the new technologies, the code declares that AI should not be allowed to subvert human societies and urges nations to avoid an ‘arms race’ in the development of ‘lethal autonomous weapons’.

The code argues that AI could mark a ‘profound change in the history of life on earth’ and that the catastrophic and existential risks it entails should be managed with ‘commensurate care and resources’. Pointing to the possibility of machines learning on their own, producing copies of themselves and acting autonomously, the code declares that AI systems designed to ‘self-improve or self-replicate in a manner that could lead to rapidly increasing quality or quantity must be subject to strict safety and control measures.’

Not everyone is pleased with the idea of a code of conduct for AI development. Some are apprehensive about the possibility of governments stepping in to over-regulate a technology that is poised for take-off. Even they would agree, however, that some kind of oversight is needed and that AI itself might provide the appropriate solutions for effective human supervision.

At the international level, the major powers, especially the United States, China and Russia, are investing in a big way in AI to gain military and strategic advantages. As an expansive international discourse on AI unfolds, Delhi is uncharacteristically mute. For nearly seven decades, Delhi was always among the first movers in global debates on the political, economic, social and ethical implications of technological revolutions. On AI, though, Delhi has much catching up to do.
