Intel Proposes Its Embedded Processor Graphics For Real-Time Artificial Intellig

Source: Motek Moyen

Summary

I was wrong to say Intel doesn’t need GPUs to compete with Nvidia on deep learning/artificial intelligence computing.

Further research revealed that Intel is also proposing its integrated processor graphics as a component along with FPGAs in deep learning inference computing.

Nvidia’s Pascal and AMD’s Vega GPU monsters will always be the best for training deep learning models. FPGAs and Intel’s embedded Processor Graphics could be top hardware choices for inference computing.

Deep learning inference refers to running an already-trained deep neural network on new data, applying the knowledge and skills it acquired during training.

Accelerating inference computing is a very important part of AI/deep learning ecosystem design. It helps deliver real-time execution of deep learning-trained capabilities.

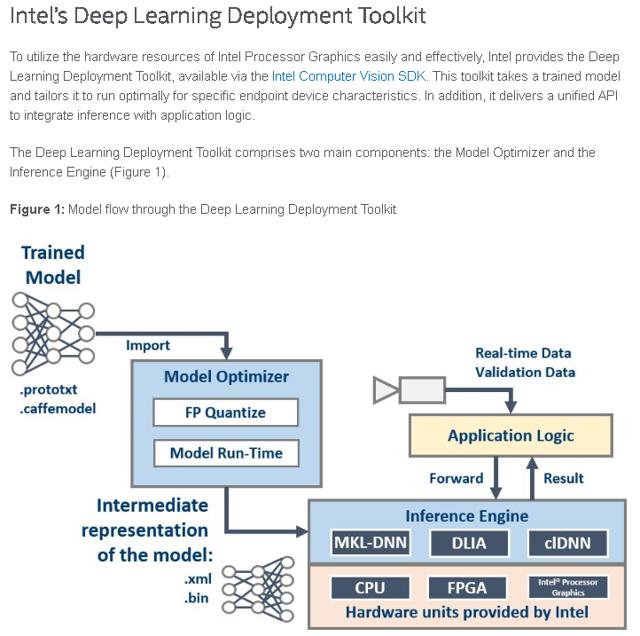

I was wrong to say that Intel (INTC) doesn’t need GPUs to compete with Nvidia (NVDA) on artificial intelligence/deep learning computing. Further research showed me that, along with FPGAs (Field Programmable Gate Arrays), Intel is proposing its embedded Processor Graphics for deep learning inference. It’s a new concept that Intel discussed only last May.

Nvidia’s GPUs can be the training engine for deep learning computers. Intel’s FPGAs and embedded Processor Graphics could be the go-to hardware accelerators for inference computing. Unlike Microsoft’s Project BrainWave (which relies only on Altera’s (NASDAQ:ALTR) Stratix 10 FPGA to accelerate deep learning inference), Intel’s Inference Engine design uses integrated GPUs alongside FPGAs.

Aside from gaming, Intel now has another way to monetize the GPU IP/technology it licensed from Nvidia. Combining its expertise in integrated graphics processing units (iGPUs) with Altera’s FPGA IP could produce a better inference acceleration design than Microsoft’s Project BrainWave. In short, by licensing its GPU technology, Nvidia has allowed Intel to compete better in deep learning inference acceleration.
