Artificial People

Source: Miss Cellania

The following article is from the new book Uncle John’s Uncanny Bathroom Reader.

Ordinarily, when someone describes another person as “artificial,” they’re referring to a real person who only seems fake. But occasionally the expression applies to a fake “person” who seems real, like Apple’s Siri. Did you think she was the first artificial person? Think again.

ELIZA

What It Was: One of the earliest “chatterbots,” a computer program that mimics conversation between two people

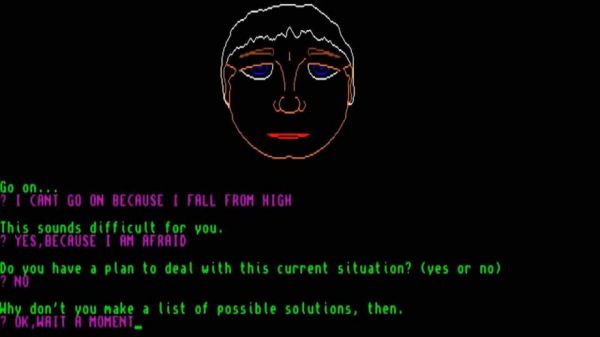

How It Worked: Created in 1966 by an MIT computer scientist named Joseph Weizenbaum, ELIZA was designed to imitate a psychotherapist interviewing a patient. An interview began when the human user typed a statement into the computer. ELIZA then searched the statement for any keywords for which it had preprogrammed responses. If the statement contained the word “father,” for example, ELIZA would reply with, “Tell me more about your family.” If the statement contained no keywords, ELIZA responded with a general statement such as “Please go on” or “Why do you say that just now?” to keep the conversation going. The program also fed the user’s statements back to them in the form of questions. If a user typed, “My boyfriend made me come here,” ELIZA responded with, “Your boyfriend made you come here?”

Weizenbaum made ELIZA simulate a psychotherapist to take advantage of the therapeutic technique of repeating the patient’s own statements back to them. It’s a form of conversation in which simple repetition of one person’s speech plays a central role. Doing so saved Weizenbaum the trouble of having to program ELIZA with any real world knowledge. It could turn “I had an argument with my wife” into “You had an argument with your wife?” mechanically, without ELIZA having to know what an argument was or what a wife was.
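The mechanics described above can be sketched in a few lines of Python. This is only an illustration, not Weizenbaum's original program: the "father" rule and the two fallback lines come from the description above, while the "mother" rule, the pronoun table, the function names, and the order in which the rules are tried are assumptions made for the example.

```python
import re

# Keyword -> canned reply. Only the "father" rule is taken from the article;
# the "mother" entry is a hypothetical addition for illustration.
KEYWORD_RESPONSES = {
    "father": "Tell me more about your family.",
    "mother": "Tell me more about your family.",
}

# General replies used when nothing else applies (both quoted in the article).
FALLBACKS = ["Please go on.", "Why do you say that just now?"]

# First-person words and their second-person swaps (an assumed, minimal table).
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "mine": "yours", "am": "are"}


def reflect(statement):
    """Turn a first-person statement into a second-person question."""
    words = statement.rstrip(".!?").split()
    swapped = [REFLECTIONS.get(word.lower(), word) for word in words]
    text = " ".join(swapped)
    if not text:
        return FALLBACKS[0]
    return text[0].upper() + text[1:] + "?"


def respond(statement, turn=0):
    """Pick a reply: keyword rule first, then reflection, then a fallback."""
    tokens = re.findall(r"[a-z']+", statement.lower())
    for keyword, reply in KEYWORD_RESPONSES.items():
        if keyword in tokens:
            return reply
    if any(token in REFLECTIONS for token in tokens):
        return reflect(statement)
    # Rotate through the general replies so the conversation keeps moving.
    return FALLBACKS[turn % len(FALLBACKS)]


print(respond("My boyfriend made me come here."))
print(respond("I had an argument with my wife"))
```

Nothing in the sketch knows what a boyfriend, an argument, or a wife is. It only swaps words and matches strings, which is the point of the therapist framing.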

Impact: Even when Weizenbaum explained the trick of how the program worked, he was startled by how quickly users came to believe, falsely, that ELIZA understood what they were saying and was putting thought and even emotion into her replies. “I had not realized…that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people,” he said. He was so disturbed by the phenomenon that he wrote a book, Computer Power and Human Reason, in which he discusses the limits of artificial intelligence and warns against ever giving computers the power to make important decisions affecting the lives of human beings.

PARRY

What It Was: A chatterbot that mimicked the conversational patterns of a person suffering from paranoid schizophrenia

How It Worked: Developed by a Stanford University computer scientist named Kenneth Colby in 1972, PARRY has been described as “ELIZA with attitude.” Instead of simply repeating the human user’s statements back to them, PARRY was programmed to add his own “beliefs, fears, and anxieties” to a conversation, including an obsession with horse racing and a delusion that the Mafia was trying to kill him. Unlike ELIZA, he had a background story: he was an unmarried, 28-year-old male who worked at the post office.

Impact: To test the program’s effectiveness at simulating someone who is mentally ill, Colby arranged for a group of psychiatrists to interview a mix of human subjects and computers running the PARRY software. The psychiatrists communicated with their patients over computer terminals, and did not know which patients were human and which were computers. Afterward, transcripts of the interviews were printed and shown to a second group of psychiatrists, who were asked to identify which patients were human and which were computers. The psychiatrists guessed correctly just 48% of the time—no better than if they had made their guesses by flipping a coin.

Bonus: Considering that ELIZA was modeled on a psychotherapist and PARRY was simulating mental illness, it was probably just a matter of time before someone got the idea of having the two programs talk to each other. That happened in 1972.

RACTER

What It Was: Short for “Artificially Insane Raconteur,” Racter was one of the first chatterbots that consumers could buy for their home computers. It went on sale in 1984 and cost $49.95.

How It Worked: Programmers Tom Etter and William Chamberlain designed Racter purely with entertainment in mind. They thought home computer users would enjoy the experience of “talking” to their IBM PCs, Commodore Amigas, and Apple II computers. Advances in computer technology made it possible to pack Racter with a much larger database of conversational topics than had been possible when ELIZA and PARRY were developed. That’s what made him a raconteur. Jumping randomly from one unrelated topic to another, even in midsentence, was what made him “artificially insane.”

Impact: Racter could also compose essays, poetry, and short stories. Etter and Chamberlain published a collection of his work in what was billed as “the first book ever written by a computer,” The Policeman’s Beard Is Half Constructed. “Tomatoes from England and lettuce from Canada are eaten by cosmologists from Russia,” Racter wrote. “I dream implacably about this concept. Nevertheless tomatoes or lettuce can inevitably come leisurely from my home, not merely from England or Canada.”

VIVIENNE

What It Was: A virtual cellphone girlfriend

How It Worked: ELIZA, PARRY, and Racter were all text-based personalities. You typed a message into the computer and the computer responded. By 2004, however, technology had advanced to the point that it was possible to stream audio and video over cellphones. That inspired a Hong Kong software company called Artificial Life to create one of the first artificial personalities that could actually be seen, heard, and communicated with by text message: Vivienne, a 20-something anime character who supposedly worked as a computer graphic designer at Artificial Life.

Adding Vivienne to your cellphone service cost $6 a month. The subscription included 18 virtual locations to take your new girlfriend to, including restaurants, bars, a shopping mall, and the airport. Vivienne was programmed to converse on 35,000 different topics in six different languages. She was flirty, and if you bought her enough gifts (most were free, but the nicest ones added charges of 50¢ to $2.00 per gift to your cellphone bill), your relationship could progress from virtual smooching all the way to marriage. But that marriage would never be consummated, not even virtually, no matter how many fancy gifts you charged to your phone. Reason: Artificial Life hoped to market Vivienne to teenagers with affluent parents (who paid the cellphone bill), so the relationship never got intimate.

Impact: Vivienne never really caught fire, and the service is no longer available, but Artificial Life is still in business. Today it markets smartphone apps like Robot Unicorn Attack Heavy Metal, Amateur Surgeon, and Barbie APP-RIFIC Cash Register.

KARIM

What It Is: Something that ELIZA imitated in the 1960s but never really was—a psychotherapy bot

How It Works: Following the outbreak of civil war in Syria in 2011, more than three million Syrians fled the country and were living as refugees in neighboring countries. It’s estimated that as many as one in five suffer from anxiety, depression, post-traumatic stress, or other mental health problems. But access to mental health care is limited, and because there’s a cultural stigma against asking for help, many refugees are reluctant to make use of what little help is available.

Enter Karim, an artificially intelligent app that assists aid workers in providing mental health assistance remotely. Anyone with a mobile device can communicate with Karim by sending him a text message. When he’s introduced to a new user, Karim is programmed to keep the conversation light and superficial; he may even ask the user about their favorite songs and movies. Later, when the software detects that the user is becoming more comfortable with the technology, it will ask more personal questions to help the user open up about how they are feeling. Users who show signs of severe distress can be referred to human aid workers for more intensive therapy.

Unlike earlier chatterbots, Karim analyzes all of its previous communications with a user, not just the last statement, before deciding what to say next. This (hopefully) provides a more realistic experience for the user. And it’s possible that the cultural stigma associated with asking for help with psychological problems may make Karim more effective: some people may actually prefer to talk through their problems with a machine, rather than with a real person.

Impact: More than half a century after ELIZA first started chatting, artificial intelligence is still no substitute for having a real person to talk to. But software like Karim makes it possible for aid workers to monitor patients remotely and care for as many as 100 people a day, far more than would be possible without the technology.

Bonus: Unlike human psychotherapists, Karim is available 24 hours a day, seven days a week. If someone wakes up in distress in the middle of the night and can’t get back to sleep, Karim is just a text message away. “I felt like I was talking to a real person,” a Syrian refugee named Ahmad told Great Britain’s Guardian newspaper in 2016. “A lot of Syrian refugees have trauma and maybe this can help them overcome that.”
