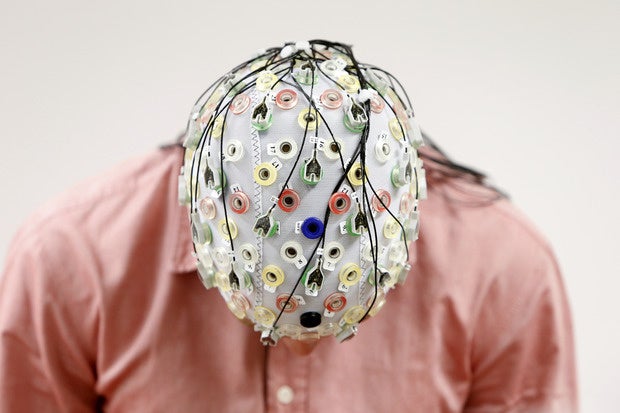

Intelligence agency wants computer scientists to develop bra

Source: Michael Cooney

If you are a computer scientist and have any thoughts on developing human brain-like functions into a new wave of computers, the researchers at the Intelligence Advanced Research Projects Activity want to hear from you.

IARPA, the radical research arm of the Office of the Director of National Intelligence, this week said it was looking to two groups to help develop this new generation of computers: computer scientists with experience in designing or building computing systems that rely on the same or similar principles as those employed by the brain, and neuroscientists who have credible ideas for how neural computing can offer practical benefits for next-generation computers.

From the IARPA request for information: “…the principles of computing underlying today's state of the art digital systems deviate substantially from the principles that govern computing in the brain. In particular, whereas mainstream computers rely on synchronous operations, high precision, and clear physical and conceptual separations between storage, data, and logic; the brain relies on asynchronous messaging, low precision storage that is co-localized with processing, and dynamic memory structures that change on both short and long time scales.”

The group lists questions on specific topics that it wants computer scientists in particular to answer, including:

Spike-based representations: Brains operate using spike-based codes that often appear sparse in time and across populations of neurons. In many systems, these codes appear noisy or imprecise, suggesting a plausible role for approximate computation in brain function.

What is the current state of research in the use of spike-based representations, sparse coding, and/or approximate computations for digital or analog computing systems?

Are there existing hardware systems that utilize representations similar to spikes? If so, in which application areas and use cases have these been deployed, and what are their performance characteristics?
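For readers unfamiliar with spike-based codes, the idea can be illustrated with a leaky integrate-and-fire unit, a common textbook model that is not part of the IARPA request. The sketch below is a minimal illustration only; the function name `lif_spike_train` and the `threshold` and `leak` values are assumptions chosen for the example. It shows how a steady but noisy input turns into a sparse train of 0/1 spikes.

```python
import random

def lif_spike_train(currents, threshold=1.0, leak=0.9):
    """Return a list of 0/1 spikes from one leaky integrate-and-fire unit.

    All parameter values are illustrative assumptions, not measured quantities.
    """
    v = 0.0                   # membrane potential
    spikes = []
    for current in currents:
        v = leak * v + current    # leaky integration of the input
        if v >= threshold:
            spikes.append(1)      # potential crossed the threshold: emit a spike
            v = 0.0               # reset after spiking
        else:
            spikes.append(0)
    return spikes

# A noisy input of roughly 0.2 per step, seeded so the run is repeatable.
rng = random.Random(0)
currents = [0.2 + rng.uniform(-0.05, 0.05) for _ in range(50)]
spikes = lif_spike_train(currents)
print(sum(spikes), "spikes in", len(spikes), "steps")
```

Most time steps carry no spike, which is the sparseness in time that the request describes; the noise in the input shifts the exact spike times from run to run when the seed changes.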

Asynchronous computation: Brains do not employ a global clock signal to synchronously update all computing elements at once. Instead, neurons function independently by default, and only transiently coordinate their activity (e.g. when participating in a coherent cell assembly).

What is the current state of research in the use of asynchronous computation and/or transient coordination for digital or analog computing systems?

Are there existing hardware systems that utilize asynchronous computation and/or transient coordination? If so, in which application areas and use cases have these been deployed, and what are their performance characteristics?
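One way to picture computation without a global clock is an event-driven simulation, in which a unit does work only when a message reaches it. The following is a rough sketch under that assumption, not a description of any system named by IARPA; `run_events`, the `connections` map, and the fixed `delay` are all hypothetical names and values.

```python
import heapq

def run_events(initial_events, connections, delay=1.0, max_events=10):
    """Process (time, unit) events in time order, with no global clock tick.

    A unit is touched only when an event addressed to it is popped; it then
    schedules events for its downstream units after a fixed delay.
    """
    queue = list(initial_events)
    heapq.heapify(queue)
    log = []
    while queue and len(log) < max_events:
        t, unit = heapq.heappop(queue)
        log.append((t, unit))
        for target in connections.get(unit, []):
            heapq.heappush(queue, (t + delay, target))
    return log

# Hypothetical wiring: "a" drives "b" and "c"; "b" drives "d".
connections = {"a": ["b", "c"], "b": ["d"]}
log = run_events([(0.0, "a")], connections)
print(log)
```

Units that receive no messages cost nothing here, in contrast to a clocked design that updates every element on every tick.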

Learning: Brains employ plasticity mechanisms that operate continuously and over multiple time scales to support online learning. Remarkably, brains continue to operate stably during ongoing plasticity.

What is the current state of research in the use of online learning over short and long time scales for digital or analog systems?

Are there existing hardware systems that utilize online learning over short and long time scales? If so, in which application areas and use cases have these been deployed, and what are their performance characteristics?
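Online learning, in the machine learning sense, means adjusting a model after every sample rather than after a full batch. The sketch below uses a one-weight delta-rule update as a stand-in; it is an illustration only, and the name `online_lms` and the two learning rates are assumptions. Running the same rule with a large and a small learning rate gives a crude picture of fast and slow time scales.

```python
def online_lms(samples, lr):
    """Fit y = w * x one sample at a time with the delta (LMS) rule."""
    w = 0.0
    for x, y in samples:
        error = y - w * x      # prediction error on this single sample
        w += lr * error * x    # immediate update; no batch is stored
    return w

# Hypothetical data stream in which the true relationship is y = 2 * x.
samples = [(1.0, 2.0)] * 100
fast = online_lms(samples, lr=0.5)    # adapts within a few samples
slow = online_lms(samples, lr=0.01)   # still converging after 100 samples
print(round(fast, 3), round(slow, 3))
```

The fast learner tracks changes quickly but would also chase noise, while the slow learner is stable but lags; biological plasticity is far richer than either.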

Co-local memory storage and computation: Brains do not strictly segregate memory and computing elements, as in the traditional von Neumann architecture (John von Neumann found inspiration for the design of the Electronic Discrete Variable Automatic Computer (EDVAC) in what was then known about the design of the brain 70 years ago, IARPA wrote). Rather, the synaptic inputs to a neuron can serve the dual role of storing memories and supporting computation.

What is the current state of research in the use of co-local memory storage and computation for digital or analog computing systems?

Are there existing hardware systems that utilize co-local memory storage and computation? If so, in which application areas and use cases have these been deployed, and what are their performance characteristics?
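A classic software analogy for weights that both store and compute is a Hopfield-style associative memory, which is swapped in here purely as an illustration and is not mentioned in the IARPA request. In this minimal sketch, with hypothetical function names `store` and `recall`, the same weight matrix holds the stored pattern and performs the recall computation.

```python
def store(patterns):
    """Build Hebbian weights from +1/-1 patterns; the weights are the memory."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:                 # no self-connections
                    w[i][j] += p[i] * p[j]
    return w

def recall(w, state):
    """One update of every unit: the stored weights also do the computing."""
    return [1 if sum(wij * s for wij, s in zip(row, state)) >= 0 else -1
            for row in w]

pattern = [1, -1, 1, -1, 1]
weights = store([pattern])
noisy = [1, -1, 1, -1, -1]                 # last element flipped
print(recall(weights, noisy) == pattern)
```

There is no separate lookup table to fetch from: recalling the pattern from the corrupted input is a weighted sum over the same numbers that encode it.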

There is a similar set of questions for the neuroscientist world as well. You can read them all here.

This current RFI isn’t IARPA’s first dance with human brain research. Last year it announced a five-year program called Machine Intelligence from Cortical Networks (MICrONS) that offered participants a “unique opportunity to pose biological questions with the greatest potential to advance theories of neural computation and obtain answers through carefully planned experimentation and data analysis.”

“Over the course of the program, participants will use their improving understanding of the representations, transformations, and learning rules employed by the brain to create ever more capable neurally-derived machine learning algorithms. Ultimate computational goals for MICrONS include the ability to perform complex information processing tasks such as one-shot learning, unsupervised clustering, and scene parsing. Ultimately, as performers incorporate these insights into successive versions of the machine learning algorithms, they will devise solutions that can perform complex information processing tasks aiming towards human-like proficiency,” IARPA stated.

IARPA said that “despite significant progress in machine learning over the past few years, today’s state of the art algorithms are brittle and do not generalize well. In contrast, the brain is able to robustly separate and categorize signals in the presence of significant noise and non-linear transformations, and can extrapolate from single examples to entire classes of stimuli.”

IARPA said that the rate of effective knowledge transfer between neuroscience and machine learning has been slow because of differing scientific priorities, funding sources, knowledge repositories, and lexicons. As a result, very few of the ideas about neural computing that have emerged over the past few decades have been incorporated into modern machine learning algorithms.
